We asked Nemanja Jovanovic, lead instrument scientist at Caltech’s Optical Observatory and Exoplanet Technology Laboratory, about emerging astrophotonic technologies on his radar

Why is it important for you and your team to stay up-to-date on the latest astrophotonic technologies?

Our day job is to develop extremely customised astronomical instruments for observatories using any technologies we like. We develop photonics technologies in the context of characterising them and optimising them for astronomy, so that one day they can be integrated into instruments that have a competitive advantage. In doing this we look to embrace new technologies every time we develop a new instrument.

You recently attended SPIE’s Astronomical Telescopes + Instrumentation conference and spoke on their Emerging Technologies panel – what piqued your interest here?

One thing that stood out to me in particular at the conference was the increasing usage of artificial intelligence (AI) and neural networks in astronomy. The technology is finding applications in processing huge datasets that are too large to process using conventional methods.

In the Emerging Technologies panel discussion, Laurence Perreault Levasseur, of the University of Montreal, showed low signal-to-noise-ratio spectra that had been put through an AI system and reconstructed with much higher fidelity. She showed that you could nail down the various noise sources of a detector better, understand them and correct for them to output a high-quality spectrum. I thought this was very powerful given that spectral extraction is a huge part of astronomy – half our data sets are spectra, while the other half are images – and so these AI-powered techniques are looking to be exceptionally powerful.

In addition, throughout the rest of the conference there were back-to-back presentations featuring neural networks being introduced into other applications of astronomy, such as those featuring adaptive optics and image reconstruction. I was blown away not only by how many people were using them, but also by how effective they were. Now that’s not to say neural networks don’t have their issues – e.g. if you don’t train them correctly you can end up with a bias – but I was shocked and amazed at just how many talks there were on neural networks and how effective they’re already becoming in astronomy. They’re definitely growing in prevalence.

Another takeaway from the conference for me was the ongoing progress being made in tackling two of the main limitations of detectors in astronomy: readout noise and dark current – observations are typically limited by one or the other. In the Emerging Technologies discussion, Eric Fossum of Dartmouth College presented sCMOS single-photon-counting detectors that get around the read noise limit, which enables many reads to be performed very quickly without being penalised. This will be important in aspects like high frame rate imaging and wavefront sensing. Then later on, in one of the detector sessions, I saw an approach that almost completely eliminates dark current. Michael Bottom’s team from the Institute for Astronomy in Hawaii partnered with Leonardo to present a paper showing a 1-megapixel avalanche photodiode array – a mercury cadmium telluride detector – where the dark current was so low that it was immeasurable. This is critical to be able to take spectra of extremely faint sources like terrestrial exoplanets and the earliest galaxies.

In addition to presenting the cutting-edge, why are events and discussions like these important in the astrophotonics community?

These types of conferences and discussions do an excellent job of breathing new life into the community and shedding light on a few interesting technologies that could get people thinking. This is particularly important in the wake of the James Webb Space Telescope (JWST) launch and the release of its first images, as there will now be a push towards developing technologies for the next flagship mission, which despite being 20+ years away from launch presents deadlines much closer than that. This is because NASA missions are typically required to be very technologically conservative. For example, although the JWST was only launched last year and is doing absolutely transformative science, the technology on it is actually around 10-12 years old. This is because it had to reach a level of readiness, reliability and robustness by the time of launch, and so the technologies involved had to be down-selected and locked in long before that.

This is why it’s important to shed light on these new technologies now for the next flagship mission. It not only needs an injection of technology, but we also need to break some of these cost scaling ideas and be able to develop something with even better science capabilities without a consequent exponential growth in price. This was highlighted in particular by NASA’s Chief Technologist, Mario Perez, on the Emerging Technologies panel, who will ultimately be the one who can convince NASA to invest in certain technology areas.

Which technologies are exciting you and your team right now?

We're working on several key areas that are all quite important to astronomy:

Spectrographs on a chip

For the first area, we’re trying to develop spectrographs on a chip. Now while there are multiple ways of doing this, one that's advanced the most over the last two or three decades is definitely arrayed waveguide gratings (AWGs). These are mostly used in telecom systems for splitting the channels of a densely multiplexed comms line, but we wanted to see if we could re-optimise them for astronomy. And so over the last few years we’ve been altering the bandwidth and resolution in the NIR around 1,550nm. We've been steamrolling multiple developments to do this, mostly using a silicon nitride platform.

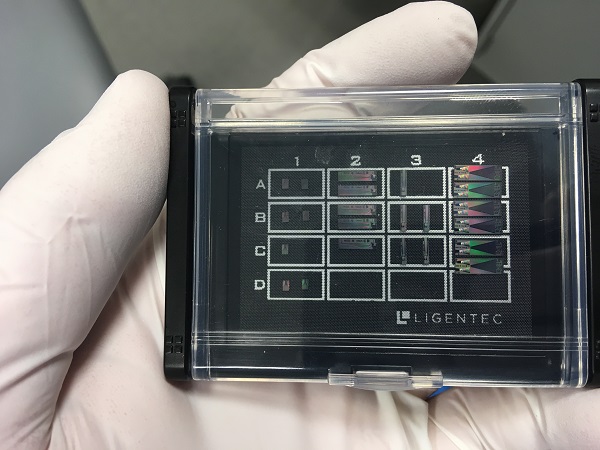

For this work we've been following a slightly different approach to developing photonics to most research institutes, in that we've been outsourcing a lot of it. While this is expensive, it's not more so than building a fab and hiring a professional photonics engineer to try to do it in-house. Our main designer has been Bright Photonics, who are world leaders in AWG design and do a fantastic job. Then we outsource to LioniX International or Ligentec for the fabrication and then PHIX Photonics Assembly for the packaging. With those teams we've just managed to fabricate and demonstrate spectrographs with resolving powers up to about 43,000, which corresponds to roughly 40 picometre channel spacing on an AWG.

Nemanja Jovanovic’s team is developing spectrographs on a chip with resolving powers an order of magnitude above what telecoms firms are capable of. (Image: Jovanovic et al.)

That's about a factor of 10 above what telecoms firms are doing. So we’ve just developed, demonstrated and delivered a suite of these spectrographs that start to push to higher resolving powers and push that phasing limit. We're also testing broadband devices that are lower resolution than the ones that the industry makes, so very broadband – instead of doing a 50-nanometre chunk in the telecoms band, we're trying to do 500-600 nanometres of bandwidth.

Through these two directions of development, we’re looking to produce spectrographs that are more generally applicable and customisable to astronomy applications. However, with spectroscopy being used in every single field – medicine, biophotonics, and more – these new devices could have applications in a broad range of areas in addition to astronomy.
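As a rough check on the figures quoted in this section, resolving power is commonly defined as R = λ/Δλ. The minimal sketch below assumes an operating wavelength of exactly 1,550nm and treats the AWG channel spacing as the resolution element Δλ; both are simplifying assumptions made here for illustration, and the function names are hypothetical.

```python
def resolving_power(wavelength_nm: float, channel_spacing_nm: float) -> float:
    """Resolving power R = wavelength / smallest resolved wavelength step."""
    return wavelength_nm / channel_spacing_nm


def channel_spacing_pm(wavelength_nm: float, r: float) -> float:
    """Channel spacing in picometres implied by resolving power r."""
    return wavelength_nm / r * 1000.0  # 1 nm = 1000 pm


# Assumed wavelength: 1,550 nm (the interview says "around 1,550nm").
WAVELENGTH_NM = 1550.0

# A 40 pm (0.040 nm) spacing gives R of about 38,750.
print(f"R at 40 pm spacing: {resolving_power(WAVELENGTH_NM, 0.040):.0f}")

# R = 43,000 implies a spacing of about 36 pm, i.e. "roughly 40 picometre".
print(f"Spacing at R = 43,000: {channel_spacing_pm(WAVELENGTH_NM, 43000):.1f} pm")
```

Under these assumptions the two quoted numbers agree to within about 10 per cent, which is consistent with the "about" and "roughly" in the text.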

Photonic lanterns

The second area we're working on is photonic lanterns. These are basically fibre waveguide devices that have a multi-mode input and multiple single-mode outputs. They convert from a large multi-mode beam to single-mode beams, and the reason we like that in astronomy is that when you have a multi-mode input, you have an easier time collecting light, especially at the telescope because the beam is aberrated – it's come through the atmosphere, with wavefront error on it, twinkling and changing with time. Adaptive optics systems can be used to address this to a degree, but they’re not perfect. And so the lantern has the ability to collect a little more light and convert the output using a single-mode fibre, which is important because single-mode fibres are time-invariant – their output doesn’t change shape, it only gets brighter or fainter. This enables equipment to be calibrated at much higher levels of quality.

Photonic lanterns are also interesting because they have the ability to split incoming light amongst their output ports, and so if you want to send one output to a spectrograph and then the others to signal monitoring and closed-loop wavefront control systems, you can do that. In fact, linking back to my previous point, one of the papers I saw presented at the conference, by Barnaby Norris from the University of Sydney, was about using a neural network to perform wavefront control via a photonic lantern. That's another interesting application of the technology because they managed to perform open-loop reconstruction, which indicates they can work out what the wavefront is, and then in a later step they will be able to close the loop to stabilise the beam.

Photonic lanterns also have applications in telecoms, where they’ve already started to look at them for spatial division multiplexing in networks, and so these techniques we’re developing in astronomy will also be very much applicable to telecoms-type systems.

Nulling interferometry

A third area we’re working on is nulling interferometry, which is useful when you're trying to detect exoplanets. As these are incredibly faint compared to the stars, you need to cancel out the starlight as much as you can in order to see them. We’re looking to achieve this using interferometric nulling. Here you take different portions of a telescope’s pupil and destructively interfere the signals in a way that cancels out the starlight, which allows the planet light to leak through. While you can never cancel out the starlight perfectly, you can reduce the number of stellar photons by orders of magnitude, enough to actually be able to detect the planet in their presence.

There are two ways of doing this using photonics. One is to use a beam combiner chip featuring different waveguides, with each one picking up a segment of the pupil so the nulling process can be used to cancel out the starlight. You then look for the leaked planet light and can also reconstruct the spectrum to determine some of the physical properties of the star. We incorporated this into the Guided-Light Interferometric Nulling Technology (GLINT) equipment now in use at the Subaru Telescope. The other way is by actually using optical fibres and photonic lanterns in a non-conventional way called fibre nulling. In this case we leverage single-mode fibres and photonic lanterns to generate the equivalent of a nuller, and then use that to be able to do spectroscopy. And we have an example of that right now deployed at the Keck Telescope, where it's already starting to help us detect faint companions that we wouldn't have been able to characterise easily otherwise.
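The nulling principle described above can be illustrated with a minimal two-beam sketch. It assumes an idealised combiner fed by two equal-amplitude beams from two portions of the pupil, with a deliberate half-wave (π) shift on one of them; the baseline, wavelength and phase-error values are hypothetical and are not taken from GLINT or the Keck instrument.

```python
import cmath
import math


def null_port_fraction(phase_offset_rad: float) -> float:
    """Fraction of the total input light reaching the nulled output.

    Two equal beams are combined after one is shifted by pi.
    phase_offset_rad is any extra phase difference between them
    (zero for a perfectly on-axis star with no wavefront error).
    Analytically this equals sin^2(phase_offset_rad / 2).
    """
    beam_1 = 1 / math.sqrt(2)
    beam_2 = cmath.exp(1j * (math.pi + phase_offset_rad)) / math.sqrt(2)
    combined = (beam_1 + beam_2) / math.sqrt(2)  # ideal 50/50 combination
    return abs(combined) ** 2


def off_axis_phase(baseline_m: float, angle_rad: float, wavelength_m: float) -> float:
    """Extra phase difference for a source at a small angle off axis."""
    return 2 * math.pi * baseline_m * angle_rad / wavelength_m


# Hypothetical numbers for illustration only.
BASELINE_M = 5.0          # separation of the two pupil portions
WAVELENGTH_M = 1.55e-6    # near-infrared
STAR_PHASE_ERROR = 0.01   # residual wavefront error in radians

star_leak = null_port_fraction(STAR_PHASE_ERROR)
planet_angle = WAVELENGTH_M / (2 * BASELINE_M)  # angle of peak transmission
planet_throughput = null_port_fraction(
    off_axis_phase(BASELINE_M, planet_angle, WAVELENGTH_M)
)

print(f"Starlight leaking through: {star_leak:.1e}")
print(f"Planet light transmitted:  {planet_throughput:.2f}")
```

In this idealised picture the starlight is suppressed by several orders of magnitude while a planet at the right angular offset passes almost unattenuated; the small residual leak from the phase error reflects the point that the cancellation is never perfect.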

Will you be looking to commercialise these technologies beyond individual observatories?

We ourselves won’t be, as our interests are purely in advancing astronomy, but that’s not to say they aren’t commercialisable. If we did choose to go in that direction, once we've managed to develop the photonic components to the levels we want, we could consider leveraging the high replicability of the chip format. For example, we could integrate a spectrograph into a small module that could then be deployed to multiple telescopes and even small payloads for drones or small satellites, not just for astronomy, but also for Earth science – monitoring the planet’s oceans and atmosphere, for example.