Anita Chandran explores how compact lidar sensors are contributing to new developments in augmented reality and beyond

Light detection and ranging (lidar) sensors are robotic eyes that allow autonomous vehicles to gauge distance, enable consumers to trial products on themselves and in their homes before purchase, and give scientists the tools to conduct important environmental monitoring at a distance. Lidar is a powerful tool, but has in the past relied on bulky, complex or expensive systems to ensure the required laser beam can be scanned at high speeds. Now, a push to miniaturise beam-scanning optics has resulted in compact lidar technology, with researchers and consumers finding more and more uses for lidar in their daily lives.

One of the first movers in realising compact lidar technology was Apple, which in 2020 added a lidar sensor to its iPhone 12 Pro. The hardware is expected to remain in Apple technology until at least the iPhone 15 Pro models. The logical assumption might be that the introduction of a lidar sensor in the iPhone was overkill: it is an energy-expensive technology whose addition as an auxiliary feature might seem wasteful. But the technology has numerous advantages in everyday scenarios, such as improving photography in low-light conditions. The lidar sensor can work in combination with the iPhone’s camera to correct difficulties in focusing by measuring close-range distances. This benefit to photography is just one of the reasons the technology was introduced to the iPhone; its main purpose is to help in the development of smartphone-based augmented reality (AR).

Lidar on the iPhone works on the same principle as any other lidar system. The iPhone emits a laser beam. In the case of the iPhone 12 Pro and 13 Pro models, this is produced by a 64-cell block of vertical-cavity surface-emitting lasers (VCSELs) developed by Lumentum and WIN Semi, which are multiplexed to an array of 576 pulses by a diffractive optical element. This array is reflected from a surface and detected by a single-photon avalanche diode (SPAD) sensor in the iPhone. The time elapsed between emission and return gives the distance to each point, building up a map of distance. Using 576 laser points improves the speed of the scan, enabling numerous data points to be collected at once.
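The time-of-flight principle described above reduces to a simple relation: the light travels to the surface and back, so the distance is half the round-trip time multiplied by the speed of light. The following is a minimal sketch of that arithmetic only, not Apple's implementation; the function names and the small grid of example timings are assumptions made for illustration.

```python
# Speed of light in vacuum, in metres per second (exact by SI definition).
SPEED_OF_LIGHT_M_PER_S = 299_792_458.0


def distance_from_round_trip(round_trip_s: float) -> float:
    """Return the one-way distance in metres for a measured round-trip time."""
    if round_trip_s < 0:
        raise ValueError("round-trip time cannot be negative")
    # The pulse covers the distance twice (out and back), hence the division by 2.
    return SPEED_OF_LIGHT_M_PER_S * round_trip_s / 2.0


def depth_map(round_trip_times_s):
    """Convert a 2D grid of round-trip times (seconds) into distances (metres)."""
    return [[distance_from_round_trip(t) for t in row] for row in round_trip_times_s]


if __name__ == "__main__":
    # Hypothetical timings for a 2 x 3 patch of laser points, in seconds.
    timings = [
        [10e-9, 12e-9, 14e-9],
        [10e-9, 20e-9, 33e-9],
    ]
    for row in depth_map(timings):
        print(["%.2f m" % d for d in row])
```

A round trip of 10 nanoseconds corresponds to roughly 1.5 metres, which gives a sense of the timing resolution a sensor needs at close range.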

Apple is pushing hard to improve the practicality of lidar in its smartphones, reportedly replacing both the Lumentum and WIN Semi VCSELs with Sony components in the iPhone 15, set to launch later this year. The change will reportedly either improve the iPhone’s battery life or deliver better performance for the same power consumption. This promises to push down costs and increase the practicality of handheld or smartphone lidar systems. It also promises underpinning hardware for Apple’s long-heralded Apple Vision Pro: wearable smartglasses integrated with AR technology.

Apple was one of the first to integrate a lidar sensor into a smartphone, having added it to the imaging arsenal of the iPhone 12 Pro in 2020 (Image: Shutterstock/Andrew Will)

Google has also been involved in lidar for the smartphone through its Project Tango, an AR platform currently only available on two models of Google Phone. The company replaced its lidar sensors with computer vision algorithms that estimate depth without the dedicated hardware. Although cheaper, computer vision is less accurate than lidar sensing, with weaker distance measurement and depth perception.

Apple’s motivation for better, more accurate and more compact lidar systems most likely comes from its desire for totally integrated AR in a wearable product, namely its Vision Pro smartglasses, touted for launch in Q1 2024. Through a combination of multiple high-resolution cameras and a lidar scanner, the Apple Vision Pro targets delivery of automatic 3D rendering of the user’s surroundings, allowing them to ‘navigate’ through virtual space and interact with it via gesture recognition.

The Vision Pro remains a steep engineering challenge. Concerns persist about how well the user’s experience will be integrated with their surroundings, and about whether the intricate, high-level technology required can be sourced at a cost and volume that makes the smartglasses affordable and manufacturable (they launch at an eye-watering $3,500).

Seeing (in) the future

Apple is not the only company working on developing better, more compact and cheaper technology for wearable AR and lidar applications. OQmented is a spin-off company from the Fraunhofer Institute for Silicon Technology, founded in 2018 by Thomas von Wantoch and Ulrich Hofmann. The company is a supplier of so-called ‘light engines’ or micro display modules that can be used for wearable AR technology as well as periphery-enabled lidar applications.

“Currently existing AR smartglasses and AR glasses still show several shortcomings,” Hofmann says, listing them as: “Too bulky form factor, too heavy, too high power consumption, too short operating time, and no eye-contact possible because of so-called ‘eye-glow’, and they’re not acceptable as consumer products because of destruction of the user’s appearance.”

OQmented’s display technology is based on laser beam scanning (LBS). The technology uses a combination of a laser and a micro-electro-mechanical system (MEMS) mirror to circumvent the shortcomings of modern AR products. Three laser beams – one red, one blue and one green – are combined into a single RGB beam, which is directed onto a custom bi-axial and bi-resonant MEMS mirror. This mirror deflects and scans the beam horizontally and vertically at high speeds. Using its own in-house algorithms to synchronise the horizontal and vertical scanning of the beam, OQmented is able to generate high-resolution, high-contrast and high-brightness images without the bulky footprint of traditional lidar laser scanners. Its technology enables miniaturised display modules with volumes lower than half a cubic centimetre, which the company stresses can be integrated into stylish AR glasses, but not without additional engineering.
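To give a feel for what scanning with a mirror that resonates on both axes involves, the sketch below models each axis as a sinusoid, which traces a Lissajous figure across the image area. This is a common textbook model and an assumption here: the article does not describe OQmented's actual scan pattern or algorithms, and the frequencies, sample rate and function names are hypothetical values chosen only for illustration.

```python
import math


def lissajous_points(freq_x_hz, freq_y_hz, duration_s, sample_rate_hz,
                     phase_rad=math.pi / 2):
    """Sample normalised (x, y) beam positions in [-1, 1] for two sinusoidal axes."""
    n_samples = int(duration_s * sample_rate_hz)
    points = []
    for i in range(n_samples):
        t = i / sample_rate_hz
        x = math.sin(2 * math.pi * freq_x_hz * t + phase_rad)
        y = math.sin(2 * math.pi * freq_y_hz * t)
        points.append((x, y))
    return points


def coverage(points, grid=32):
    """Fraction of cells in a grid x grid image that the beam visits at least once."""
    visited = set()
    for x, y in points:
        # Map [-1, 1] to a cell index, clamping the upper edge into the last cell.
        col = min(grid - 1, int((x + 1) / 2 * grid))
        row = min(grid - 1, int((y + 1) / 2 * grid))
        visited.add((row, col))
    return len(visited) / (grid * grid)


if __name__ == "__main__":
    # Hypothetical axis frequencies; not taken from any real device.
    pts = lissajous_points(13_000, 17_000, duration_s=0.01, sample_rate_hz=2_000_000)
    print("cells covered: %.1f%%" % (100 * coverage(pts)))
```

In this model, how quickly the beam fills the image depends on the ratio of the two frequencies, which is one reason synchronising the two axes matters for image quality.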

“For AR glasses applications this light engine is not enough. An additional combiner is needed that combines and overlays the displayed digital synthetic image information with the user’s world view,” Hofmann explains. “Frequently this combiner is a diffractive waveguide or a reflective (geometric waveguide) or a holographic combiner. The waveguides then actually build the lenses of the AR glasses.”

“LBS enables the usage of the AR glasses also in bright daylight,” Hofmann continues. “OQmented’s technology is also unique with respect to its low power consumption. A few weeks ago a world record was achieved when it was demonstrated that a MEMS mirror and driver ASIC consume only about 6 mW of power, which is one of the keys for extremely long operating time needed for real consumer AR glasses.”

OQmented supplies its modules to big consumer brands, and aims to see its products make it into different generations of AR products step-by-step, with its first introduction in 2025. The company also aims to utilise its scanners in automotive systems, for improving peripheral vision.

For the future of AR, the company believes its LBS technology is more promising than its rival: a combination of microLED and lidar, long heralded as an industry-leading solution. This is because microLED systems require a trade-off between pixel size and efficiency. LBS solves some of these issues, in particular requiring far fewer optics than microLED systems, enabling a boost in efficiency.

360-degree vision

Researchers are also targeting ways of improving peripheral vision using lidar sensors. A team of researchers has now developed a new way of enabling 360-degree vision based on lidar by utilising metasurfaces, artificial surfaces designed to alter the propagation of light depending on its frequency. These nano-optical metasurfaces give the potential for a view free of blindspots from a smartphone lidar sensor.

Lidar traditionally measures distance by projecting light onto objects and measuring the time it takes for the light to return to the lidar sensor. In most applications, including AR, it is also ideal for lidar sensors to have peripheral vision, and even to see behind the user. This aids immersion and broadens the range of applications, but conventional lidar sensors cannot accommodate it.

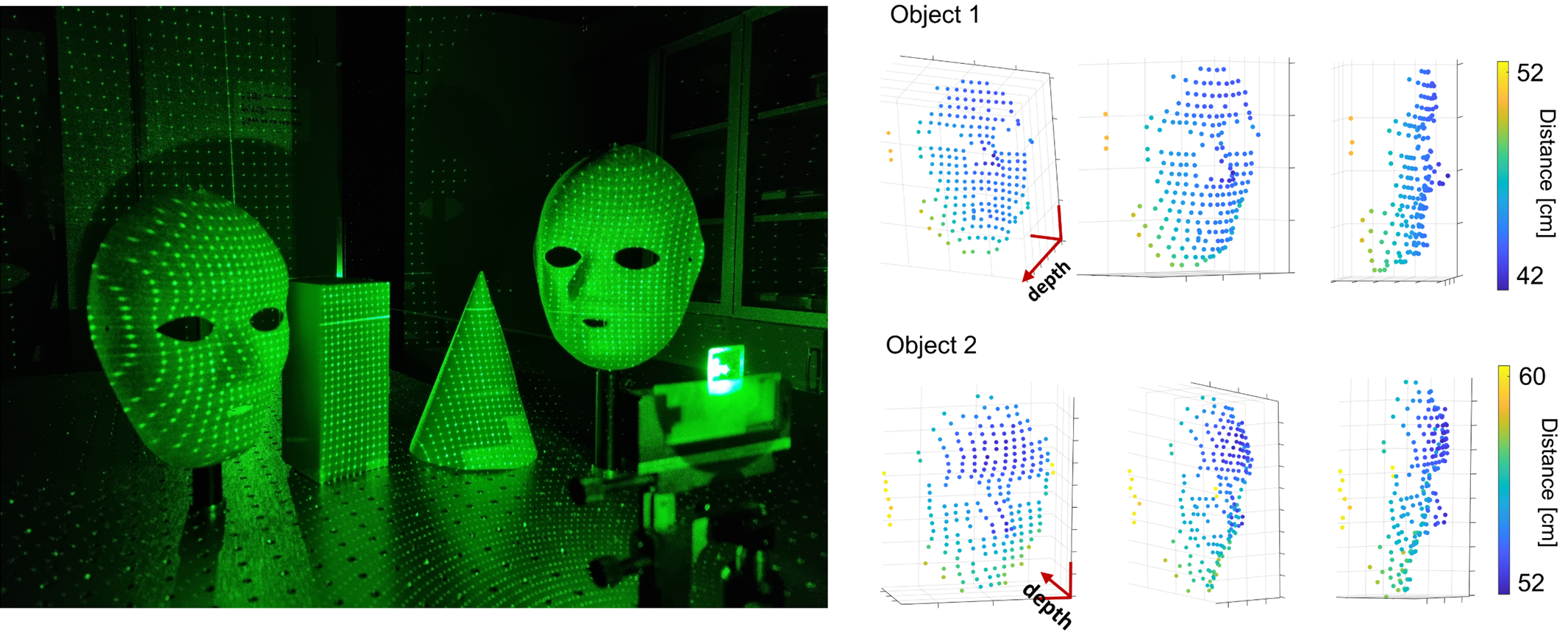

“Our method, referred to as active stereo, utilises structured light to illuminate a scene,” says Professor Junsuk Rho, of the Departments of Mechanical and Chemical Engineering at the Pohang University of Science and Technology, part of the team that published their method in Nature Communications. “Conventional illumination modules, such as diffractive optical elements, have limitations in projecting a wide field-of-view due to their micron-sized pixel pitch. In this research, we leverage novel nanostructures called metasurfaces to expand the field-of-view up to 180 degrees.”

Researchers at the Pohang University of Science and Technology are using a nano-scale metasurface lens to split a single laser beam into 10,000 points across a full 180-degree field of view (Image: Postech)

The ultrasmall metasurfaces are capable of projecting arrays containing over 10,000 laser dots onto target objects, enabling users to access 3D information in a 360-degree scanning region. This not only expands the field of view of traditional lidar devices, with consequences in AR, virtual reality and autonomous vehicles, but also drives miniaturisation of lidar sensors using nanostructures.

“Our metasurface-based active stereo does not necessitate expensive sensors and laser sources,” Rho continues. “Active stereo does not require rotation and can simultaneously reconstruct depth maps over a 180-degree range.”
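Stereo methods recover depth by triangulation rather than by timing: a projected dot appears at slightly different image positions in two views, and the size of that shift (the disparity) encodes distance. The sketch below shows the standard relation for an idealised, rectified pinhole stereo pair. It is an illustration of the general principle only, not the Postech team's algorithm; a 180-degree system would need a wide-angle camera model, and the function name and all numerical values are assumptions.

```python
def depth_from_disparity(focal_length_px: float, baseline_m: float,
                         disparity_px: float) -> float:
    """Depth in metres of a point seen by a rectified pinhole stereo pair.

    focal_length_px: focal length expressed in pixels
    baseline_m:      separation between the two viewpoints, in metres
    disparity_px:    horizontal shift of the same dot between the two images
    """
    if disparity_px <= 0:
        raise ValueError("disparity must be positive for a point in front of the cameras")
    # Similar triangles: depth = focal length * baseline / disparity.
    return focal_length_px * baseline_m / disparity_px


if __name__ == "__main__":
    # Hypothetical set-up: 800 px focal length, 5 cm baseline.
    for disparity in (80.0, 40.0, 20.0, 10.0):
        z = depth_from_disparity(800.0, 0.05, disparity)
        print("disparity %5.1f px -> depth %.2f m" % (disparity, z))
```

Because depth is inversely proportional to disparity, distant points produce very small shifts that are harder to measure, which is one general reason short-baseline systems tend to be limited in working distance.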

The project is currently still being developed in the laboratory, with some hurdles produced by the working distance of the system, which Rho describes as “personal-space limited”. The researchers believe these challenges can be overcome through the integration of better laser sources, and the team intend to roll the technology out after further integration with such sources and ultrasmall cameras.

Coast-to-coast monitoring

The development of lidar in smartphones such as the iPhone has also led to breakthroughs in other, more unexpected areas of research such as environmental monitoring. Gregor Lützenburg, a geography PhD fellow at the University of Copenhagen, has recently developed an environmental monitoring tool based on the iPhone 12 Pro’s built-in lidar sensor.

“I am interested in landscape changes and looking at how coastlines change over time. Lidar is a really nice technique because we can get 3D data so that when we look at, for example, a cliff, we can see how it changes over time. Not just the position of the coastline, but how the cliff evolves in 3D,” he says. “Before lidar on the iPhone we always had to use very fancy and advanced equipment, which was difficult to use and also heavy to carry, as well as being expensive to acquire.”

The iPhone’s lidar sensor was not only small and cheap, but also enabled users to make measurements without prior knowledge or training. Lützenburg believes this could enable large-scale citizen science applications.

“Citizen science becomes very easy with this kind of tool because so many people have an iPhone and so many people walk the dog, or go for a hike along the beach,” he adds. “We can just say ‘hey, if you’re there anyway, you can use that iPhone that’s in your hand to make a 3D model of the coast and send it to us to measure changes in the landscape’.”

University of Copenhagen researchers have developed an environmental monitoring tool that uses a smartphone-based lidar scanner to capture 3D data of coastlines (Image: Lützenburg et al.)

Though the technology has shown extremely promising results, there are some limitations to Lützenburg’s monitoring technique, mostly related to the practical shortcomings of the sensor technology. Partly, this is to do with imaging references: for citizen science applications, each picture of a landscape has to be calibrated to the same reference point, which requires a lot of time-consuming post-processing. The iPhone lidar sensor is also not capable of measuring every type of surface.

“The scanner doesn’t really work on water or reflecting surfaces. So if you’re trying to use it in a mirror or point it through a window, that won’t really work,” Lützenburg continues. “But if you’re in an outer environment, like a beach with some vegetation, then it works well.”

Lützenburg also stresses that one of the biggest issues with smartphone lidar, as Rho also notes, is the working distance. Unlike other contemporary lidar systems, the technology developed for environmental monitoring is limited to a distance of around five metres, so the user has to be relatively close to the geographical feature of interest. For large features, such as mountains, the technology struggles to compete. This is not necessarily a dealbreaker, Lützenburg says.

“This is just the first generation smartphone lidar hardware. It was introduced in 2020 and the hardware hasn’t been updated yet. It’s basically about power supply: the more power you allow it to consume, the higher the laser energy, and the longer its range.”

As the technology evolves and smartphone lidar systems become more powerful and more practical, the gains will extend beyond AR to offshoot applications such as Lützenburg’s environmental monitoring app. Such applications could mean big strides for citizen science, and benefits for regular consumers too. With lidar, the application space is only going to grow.