Anita Chadran reveals the photonics technologies pushing the boundaries of smartphone photography

In the last 15 years, the smartphone has become one of the most indispensable tools in modern life. We keep everything on our phones: our credit cards, our calendars and, for most people, our cameras. For many, the smartphone has supplanted traditional “point-and-shoot” cameras, with smartphones accounting for more than 89% of all photographs taken in 2022 according to Statista, a number that photo management firm Mylio says is set to rise to more than 90% in 2023.

The desire for better smartphone cameras can also often drive consumer choices. In terms of marketing, smartphone manufacturers hasten to highlight the improvements in their smartphone cameras, centering optical parameters such as sensor size, number of megapixels and options for video stabilisation, as well as protrusion and weight.

The increasing demand for better, more professional and more streamlined smartphone cameras, alongside a desire for more functionality such as improved night vision photography, is pushing manufacturers to develop new optical technologies. At varying levels of readiness, from laboratory research to roll-out, these technologies are now being deployed in handheld devices.

Superlative sensors for DSLR-quality shots

In direct competition with state-of-the-art DSLRs, point-and-shoot camera manufacturers are now getting involved in the smartphone game. Leica Camera (Leica) is a manufacturer of cameras, lenses and imaging devices. The company has recently released its second own-brand smartphone, the Leitz Phone 2, with the aim of bringing signature Leica optics to the smartphone platform.

Leica claims that the Leitz Phone 2 retains the “Leica look” in smartphone photographs by using software to emulate three of the company’s M-lenses: the Summilux 28, Summilux 35 and Noctilux 50. This software, called the “Leitz Looks” mode, is what Leica says enables its smartphone to generate the traditional Leica “bokeh” in smartphone photography. The move towards “computational photography” is already evident in most top-end smartphone brands, with post-processing tools being employed to render sharper images with flexible colour balancing.

“The Leitz Phone 2 stands out with a huge 1-inch sensor in combination with the latest Snapdragon chipset,” says Julian Burczyk, Head of Product Management Mobile at Leica. “From a software perspective the ‘Leitz Looks’ mode, an artificial simulation of famous Leica lenses, is the key feature of the second Leitz Phone generation.”

The 1-inch CMOS image sensor is also a recent and revolutionary addition to the smartphone arsenal. Introduced by Sony in 2022, the IMX989 is the first 1-inch sensor designed specifically for smartphones, and is huge in the context of the device. Other smartphones, such as Sony’s Xperia PRO-I, already carry 1-inch sensors, but these were all made for digital cameras and re-purposed for smartphones. The IMX989 is the first purpose-built smartphone sensor to rival the sensor size of a traditional digital camera, a milestone in the development of smartphone camera technology.

Large image sensors undeniably benefit smartphone cameras, giving photographs better natural blur and improving performance in dark or complicated photographic environments. The sheer size of the sensor also improves light collection, so images are captured faster and with a greater volume of information. Sony’s IMX989 sensor is now in numerous smartphones worldwide, including the Leitz Phone 2, Vivo X90 Pro Plus and Xiaomi 13 Pro as well as other models from these brands. More companies are expected to follow suit in 2023.

“The hardware itself is already at a very good level and of course we will remain using top-level components for building the smartphone itself,” continues Burczyk. “The limited space in a smartphone for bigger lenses will, in the future, be balanced out with even better software and algorithms such as the Leitz Looks.”

True to colour

“Smartphone cameras are extremely advanced. Really, they’re pieces of art. But they’re not able to take a colour picture,” says Jonathan Borremans, CTO of Spectricity, a company specialising in spectral sensing solutions. “What we say is that they’re colour blind, and this is recognised by smartphone vendors, the press and by consumers.”

When human eyes record an image, the brain interprets that image and calibrates the understanding of what is seen. This is why, irrespective of the lighting conditions, be they a bright sunny day or a dimly lit room, white paper looks white to the human eye. Smartphones mimic this with a process called “white balancing”, but without the aid of context clues. When we see images that have not been white balanced, or where the white balancing is not correctly calibrated, they can look dull or lifeless. This phenomenon is what makes some optical illusions effective, but it is something that camera manufacturers struggle to correct for.

Smartphone cameras can do colour balancing automatically, inferring details from the colour temperature of the light source in the background. This refers to how blue or orange (cold or warm) a light source is, and also the type of source itself (fluorescent lighting or LEDs, for example). But without using a well-defined source of lighting, such as studio lighting, true colour balancing is impossible even for the most sophisticated of smartphones. This means that photographs taken with smartphone cameras differ in colour from the original subject of a photograph, especially where multiple sources of lighting are involved.
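As a rough illustration of automatic white balancing, the sketch below uses the “grey-world” heuristic, a textbook method chosen here purely for illustration; it is not claimed to be what any particular smartphone does. The function name and the sample pixel values are hypothetical.

```python
def grey_world_balance(pixels):
    """Scale each channel so the image's average colour becomes neutral grey.

    `pixels` is a list of (red, green, blue) tuples with values from 0 to 255.
    Assumption: the scene averages out to grey, which real scenes often do not.
    """
    n = len(pixels)
    # Average value of each channel across the whole image.
    means = [sum(p[c] for p in pixels) / n for c in range(3)]
    grey = sum(means) / 3
    # Per-channel gains that pull the three averages to the same level.
    gains = [grey / m if m else 1.0 for m in means]
    return [tuple(min(255.0, p[c] * gains[c]) for c in range(3)) for p in pixels]


# A white sheet and a grey card photographed under warm (orange) light.
warm_scene = [(240, 200, 120), (120, 100, 60)]
balanced = grey_world_balance(warm_scene)
for pixel in balanced:
    print([round(v, 1) for v in pixel])
```

Because a single set of gains is applied to the whole image, a heuristic like this can only compensate for one light source at a time, which is the limitation with mixed lighting described above.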

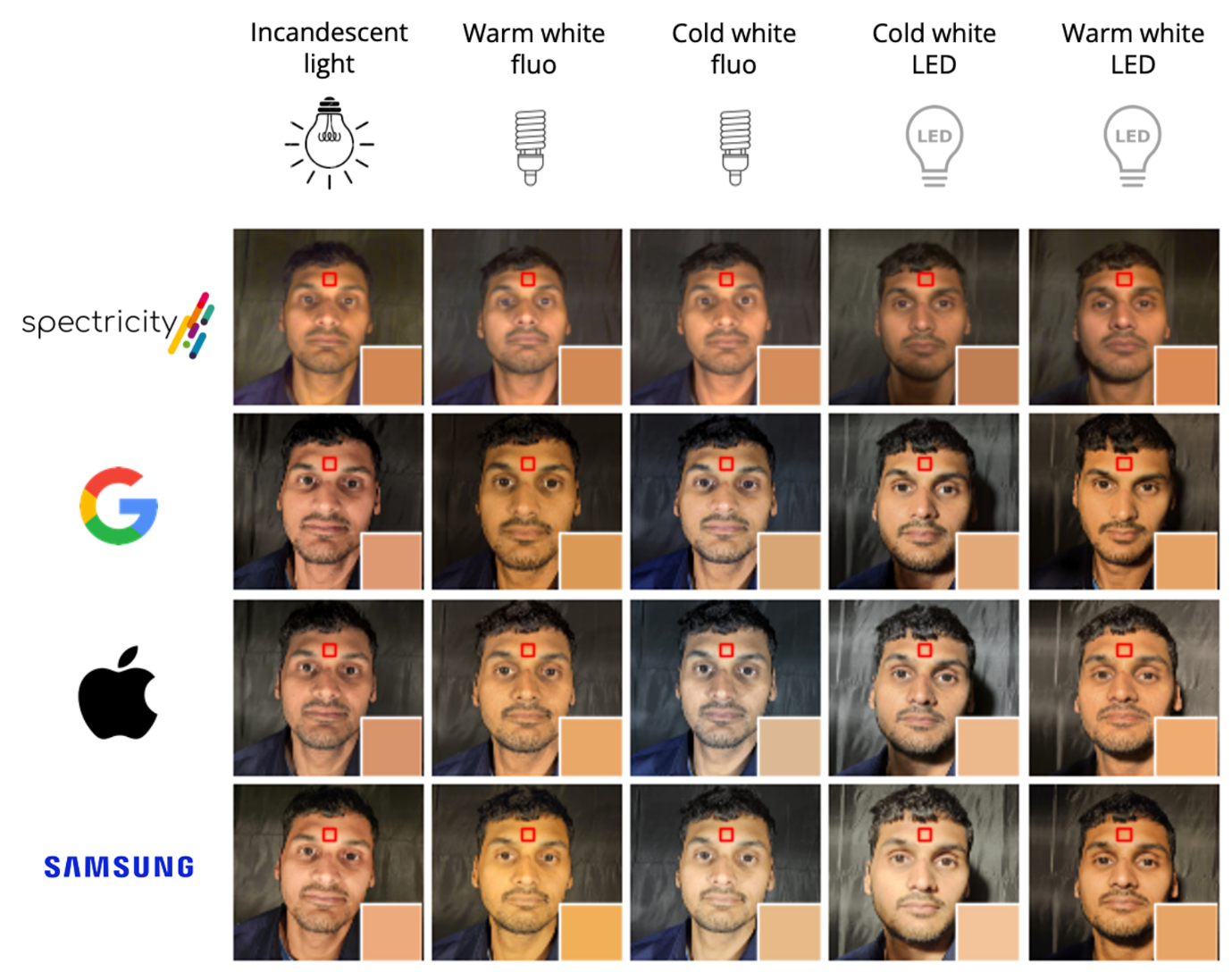

The lack of consistency across different lighting conditions can cause many problems, but poses a particular challenge to people of colour, who can struggle to see their skin tone reproduced consistently in different lighting conditions. Spectricity is a company tackling this problem through its S1 sensor, a new, first-of-its-kind multispectral image sensor for smartphone cameras.

Spectricity’s colour balancing technology at work against a number of competitor techniques, for different sources of illumination. (Image: Spectricity)

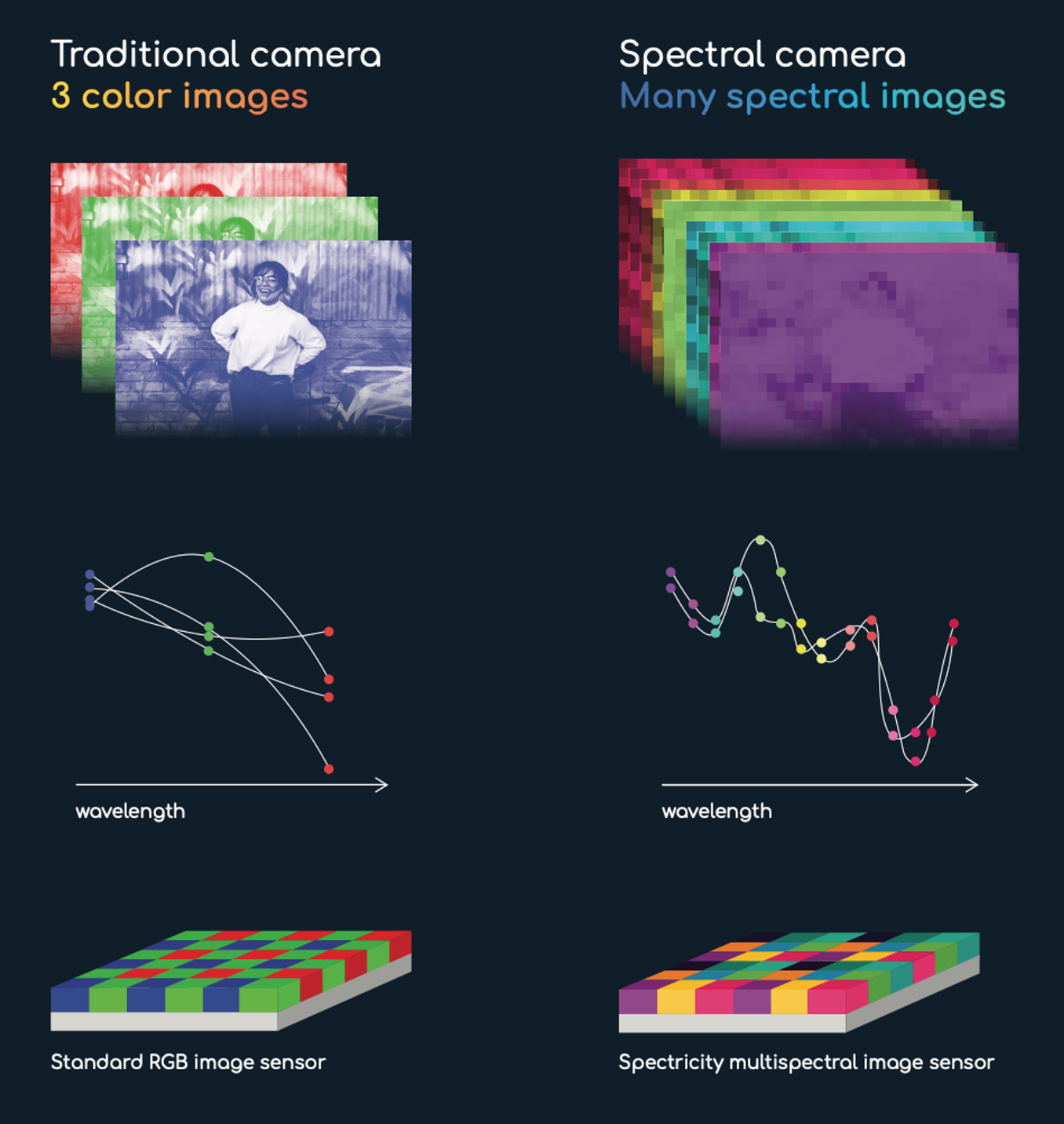

“A normal camera basically measures colour with red, green and blue filters,” says Borremans. “It is a big chip that has red, green and blue filters on it to filter the light and form a colour picture. At Spectricity, we can build in more different filters so that we can split the light spectrum into more pieces. This means we can more accurately reconstruct colours.”

Spectricity’s S1 sensor is a powerful combination of new multispectral filter technology and computational imaging algorithms. By using an array of very small filters, and many more of them than in standard smartphones, the sensor can accurately reconstruct the colour of images irrespective of the colour temperature of the lighting source, and irrespective of the number of sources of illumination. Spectricity’s sensors boast 16-channel operation across the visible and near-infrared, compared with the eight-channel operation in Huawei’s multispectral cameras housed in its latest P40 Series smartphones.
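To see why more filter channels can help, consider the toy model below. It is only a sketch under strong assumptions: idealised, non-overlapping box filters and a spectrum sampled in 16 narrow bands, with hypothetical function names and values. It does not represent Spectricity’s filters or algorithms.

```python
# Toy model: a light spectrum sampled in 16 narrow bands, ordered blue to red.
# Assumption: idealised box filters; real filter responses overlap.
RGB_BANDS = {"blue": range(0, 5), "green": range(5, 11), "red": range(11, 16)}


def rgb_reading(spectrum):
    """Three broad filters: each sums the energy of several narrow bands."""
    return tuple(
        sum(spectrum[i] for i in RGB_BANDS[name]) for name in ("red", "green", "blue")
    )


def multispectral_reading(spectrum):
    """Sixteen narrow filters: one reading per band."""
    return tuple(spectrum)


smooth = [1.0] * 16
spiky = [1.0] * 16
# Move energy between two neighbouring green bands; the green total is unchanged.
spiky[6] += 0.5
spiky[7] -= 0.5

print(rgb_reading(smooth) == rgb_reading(spiky))                      # same RGB values
print(multispectral_reading(smooth) == multispectral_reading(spiky))  # different spectra
```

In this toy case the two spectra are indistinguishable to the three broad filters but clearly different to the 16 narrow ones, which is the extra information a multispectral sensor has to work with.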

Other companies have also been working on improving their colour reproduction. Huawei’s P30 series replaced RGB sensors in its phones with RYYB sensors, and improved upon these in its latest models. Numerous other devices, including the LG G5, launched in 2016, also include spectral sensors.

Borremans and the team at Spectricity hasten to add that current market alternatives are not robust to multiple sources of illumination. “Current sensors measure the average illumination to try to help with the colour but this doesn’t work well,” he says, speaking of modern sensors that correct through averaging. “We [at Spectricity] are able to make filters so small that we can put a different filter on every single pixel of your camera.”

The technology could potentially target a wide range of applications. In particular, the company highlights skin-tone matching. The reason people of colour often don’t feel fully represented by smartphone technology is, in part, because their skin tones are inconsistent across multiple images with different lighting. Better skin-tone matching could help consumers feel better represented, and also help them make better choices in e-commerce, particularly when purchasing cosmetics.

A comparison between a standard red-green-blue (RGB) camera and Spectricity’s multispectral image sensor (Image: Spectricity)

Spectricity also highlights its sensors as useful for spectral measurements, allowing parameters other than colour to be measured. “We can extract biomarkers,” Borremans says. “We can figure out how much melanin there is in the skin, how much blood volume there is and what the oxygen saturation is.” These metrics could be used for applications such as health and diagnostics, or anti-spoofing, giving more power to the smartphone user.

The team hopes to see these sensors in smartphones as early as 2024, and expects the technology to propagate widely through the industry. Only Google provides a purely computational solution through its Real Tone feature – launched with the Pixel 6 phone in 2021 – which also claims to redress issues of colour balancing and skin tone accuracy in photographs.

Tiny but mighty?

Innovations in smartphone optics are also being made within industry and in academia. One such project pushing the limits is FacetVision, a research and development project from the Fraunhofer Future Foundation exploring next-generation designs in imaging optics. In particular, the project looks at ways of miniaturising optics for smartphones, focusing on low-cost, high-volume production as well as scalability. One target area of importance for FacetVision is the development of thinner, or ‘flat z’ cameras for use in smartphones.

“Usually cameras nowadays stick out of the smartphone and its case,” says Frank Wipperman of the FacetVision project. “These don’t look nice, and they are growing in size year on year.”

The z-height of a camera depends on its resolution and pixel size. A greater number of pixels, which is desirable to smartphone manufacturers, or a larger pixel size will increase the camera’s z-height. Smartphone manufacturers have been reducing pixel size in a bid to minimise the camera z-height and prevent protrusion from the phone casing, but this comes at the cost of light collection. To date, pixel sizes have dropped as low as 600 nm; for comparison, Apple’s iPhone 14 has a pixel pitch of 2.44 microns and the Google Pixel 7 has a pixel pitch of 1.2 microns. While pixel sizes might have fallen to the wavelength scale (red light having a wavelength of around 600 nm), this isn’t always reflective of the pixel scale in smartphone cameras themselves: pixels can be ‘binned’, meaning multiple pixels are used to form a single virtual pixel, increasing the effective pixel size and dropping the overall resolution of the camera.
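Pixel binning can be sketched in a few lines. The example below is a minimal illustration, assuming simple 2×2 summation on a greyscale readout; the function name, the sample values and the 0.6 micron pitch are hypothetical, and real sensors bin within a colour filter pattern.

```python
def bin_pixels(image, factor=2):
    """Sum each factor x factor block of sensor pixels into one virtual pixel.

    `image` is a list of rows of brightness values. Any rows or columns
    left over at the edges (when the size is not a multiple of `factor`)
    are dropped.
    """
    rows, cols = len(image), len(image[0])
    return [
        [
            sum(image[r + dr][c + dc] for dr in range(factor) for dc in range(factor))
            for c in range(0, cols - cols % factor, factor)
        ]
        for r in range(0, rows - rows % factor, factor)
    ]


raw = [
    [1, 2, 3, 4],
    [5, 6, 7, 8],
    [9, 10, 11, 12],
    [13, 14, 15, 16],
]
binned = bin_pixels(raw)  # the 4x4 readout becomes a 2x2 image

# With 2x2 binning, hypothetical 0.6 micron pixels behave like 1.2 micron ones.
effective_pitch_um = 0.6 * 2
print(binned, effective_pitch_um)
```

Each virtual pixel collects the light of four physical pixels, but the image has a quarter as many pixels as the sensor.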

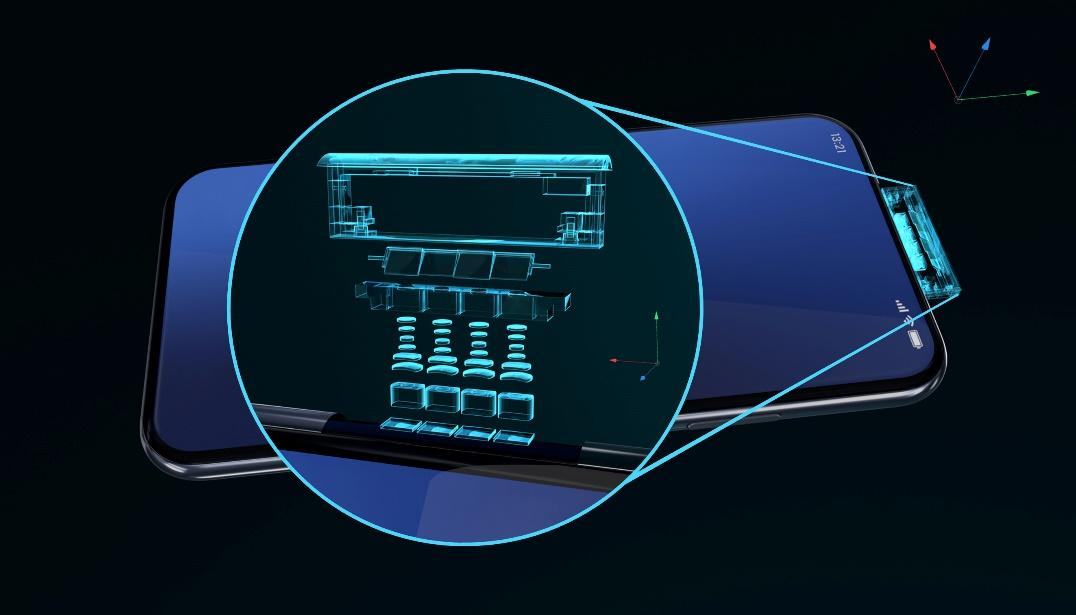

To get around the problem of reducing or increasing the physical pixel number and pixel pitch within a smartphone camera, FacetVision takes a different approach to imaging. The project splits the field of view of the camera into four channels, applying software to stitch together the complete images afterwards. By using a smaller field of view, smaller optics can be used and, hence, smaller optical modules.

A visualisation of FacetVision's four-part camera (Image: FacetVision, Fraunhofer Future Foundation)

“It’s like a stereo camera for the four channels,” says Wipperman. “From the raw data of the four channels you can calculate a mapping and then you do the rendering of that image information to produce the final image.”

Through the stitching process, the team can also correct for parallax errors. It also employs a reflective configuration, reflecting the captured light using a wedged mirror, that allows the same sensor to be used for images taken by both the front and back cameras. This provides a significant reduction in camera size as well as reducing overall costs of the module.

“You can shrink the camera height by 50%,” Wipperman continues. “Do that and the camera, which sticks out nowadays, would be within the casing of the smartphone. It would look much cleaner.”

The team at FacetVision is also addressing issues of autofocus and image stabilisation within smartphones. Currently, image stabilisation in smartphones is done using voice coil actuators, which appear in models as current as Apple’s iPhone 14. These actuators generate waste heat, caused by the high currents required to induce the actuator’s magnetic fields. In FacetVision’s design, the relatively small field of view means that piezo-electric bending actuators could be employed instead, leading to lower currents, less heat generation and reduced energy consumption. In real terms, this means improved battery life when continuously using the camera.

Each aspect of FacetVision technology aims to drive smartphone camera technology forwards, and stitching the project together takes time, collaboration, and resources. But with the increasing consumer demand for more professional cameras, smartphone manufacturers will soon have to look for methods of keeping the optics within the slim smartphone casing, a niche that FacetVision hopes to corner.

The market for smartphone cameras is growing rapidly, and the desire to meet the needs of numerous customer bases is demanding more flexibility from manufacturers. Innovation is rife across all sectors of smartphone photography, with many more exciting developments promised in the near future.