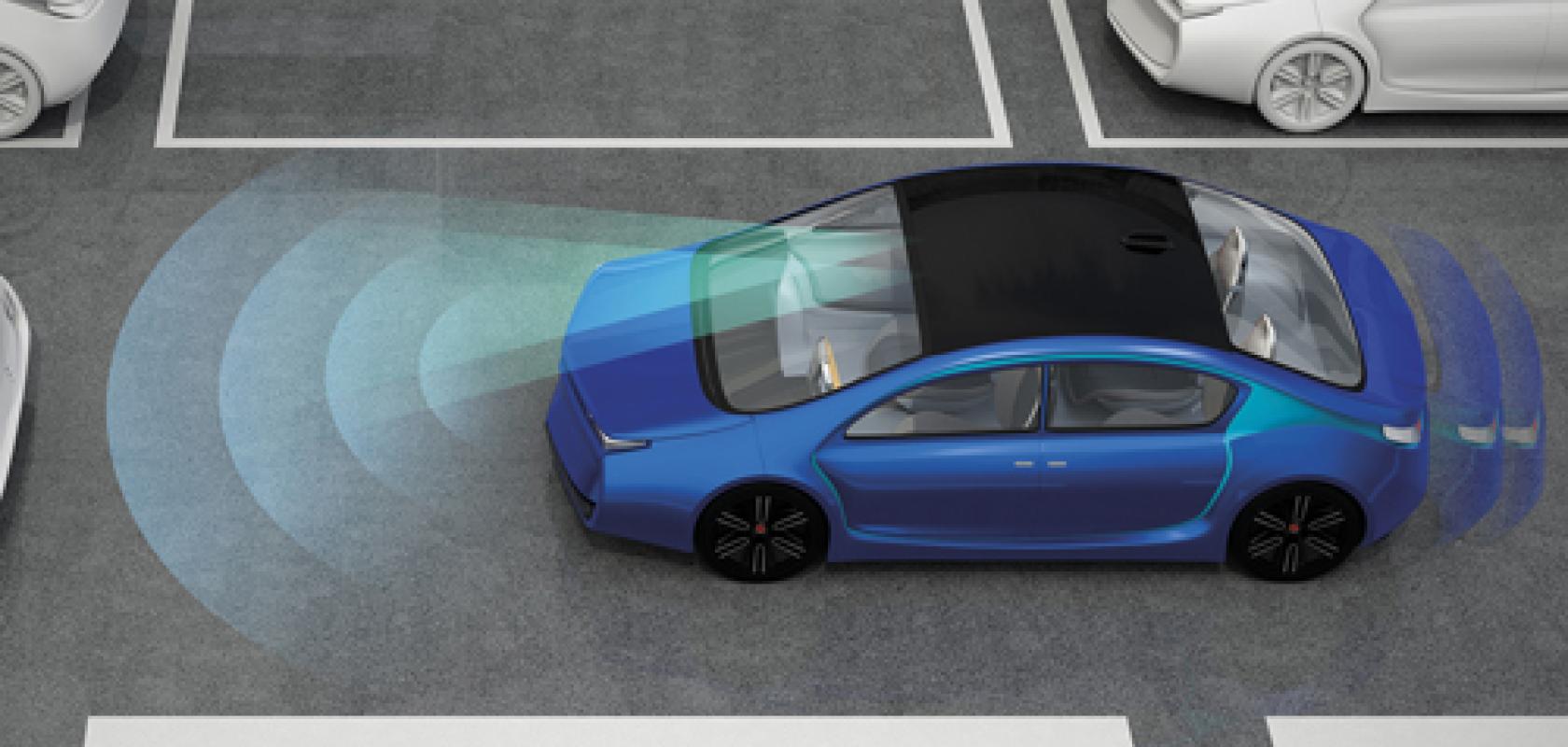

We might not yet be at the stage where a person can read a book while their car drives them to their destination, but the autonomous vehicle market is growing at a rapid pace.

Although just one small part of the autonomous vehicle ecosystem, sensors and detectors are a key enabler of advanced driver assistance systems (ADAS) and will play a major role in realising the autonomous driving dream.

Sensor providers are very much part of this fast-growing market, and now face the challenge of designing devices that are not only robust and high-performing, like those for military applications, but that also meet tighter space and cost limits.

Rather than supplying complete systems to car manufacturers, sensor manufacturers will work with OEMs and tier 1 suppliers, who will then integrate the sensors into ADAS devices such as lidar, said Denis Boudreau, product leader of photon detection at Excelitas. ‘We are at the component, sub-system level. We can add intelligence to the components; so, you can add drive electronics to the laser, for example, or read-out electronics to the detectors – but then you need the software behind to process those signals, and that’s where the tier one partners would come into play,’ he explained.

Let sensors take control

The words ‘autonomous’ or ‘driverless’ are often used to describe vehicles with automatic functions, which can sometimes lead to confusion as the terms can refer to cars with varying degrees of automation.

The US Department of Transportation’s National Highway Traffic Safety Administration (NHTSA) recognises six levels of driving automation, adopted from SAE International’s J3016 standard. The scale runs from zero to five, with zero being a traditional car that has no system intervention, and level five being fully autonomous: the user can simply enter the address they’d like to go to, and then relax, or even sleep, while the vehicle drives itself.
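For reference, the six levels can be written down as a small lookup type. This is a minimal sketch: the level names follow SAE J3016, while the class name, the helper function and its simplified rule (at levels 0 to 2 a human driver must supervise at all times) are our own illustration rather than any official API.

```python
from enum import IntEnum


class DrivingAutomationLevel(IntEnum):
    """Levels 0 to 5, named as in SAE J3016 (the taxonomy NHTSA uses)."""

    NO_DRIVING_AUTOMATION = 0
    DRIVER_ASSISTANCE = 1
    PARTIAL_DRIVING_AUTOMATION = 2
    CONDITIONAL_DRIVING_AUTOMATION = 3
    HIGH_DRIVING_AUTOMATION = 4
    FULL_DRIVING_AUTOMATION = 5


def human_must_supervise(level: DrivingAutomationLevel) -> bool:
    """Simplified rule: at levels 0-2 the human driver supervises at all times."""
    return level <= DrivingAutomationLevel.PARTIAL_DRIVING_AUTOMATION


if __name__ == "__main__":
    # Print each level with whether a human must keep supervising.
    for level in DrivingAutomationLevel:
        print(int(level), level.name, human_must_supervise(level))
```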

‘Personally, I think that these types of systems will first show up in public transit – so I think the volumes will be a bit limited early on,’ said Boudreau. ‘But then, gradually, ADAS will move into cars. People have varying views about timelines – I think 2021 is probably the earliest we will see this happening, and even then this is optimistic for level five. But we will have to see.’

Whether it’s for a simpler function such as assisted parking, or for the more advanced autonomous cars being trialled by car giants such as Ford, it’s crucial that the sensors are highly reliable, according to Dave Barry, technical sales engineer at Laser Components. ‘Reliability is key; you must have something that’s completely fail-safe. The idea here is to reduce accidents on the road, so we need something that is highly reliable, with extremely long mean time to failure (MTTF) but also highly performing.’

Sensor manufacturers are used to designing highly reliable devices for military and defence applications, but the task becomes more difficult when space and cost constraints are added, according to Boudreau from Excelitas. ‘The first requirement I would say is making the sensors as small as possible so that they can be integrated into the vehicle,’ he said. ‘[Another] one is cost-effectiveness, particularly with the autonomous vehicle application being in the consumer space. If they are going to deploy these systems in large volumes – which they expect to in the future – then cost-effectiveness of the components is key.’

Another aspect is speed, added Barry. Laser Components has recently released a new series of avalanche photodiode arrays – the SAH1L12 series – which the company has designed for applications such as lidar and automatic braking systems in autonomous vehicles.

In automatic braking, for example, the sensor arrays are combined with a pulsed source, typically at 850nm, which emits light in pulses lasting nanoseconds. The light reflects from objects in the surrounding environment and is received by the photodetector arrays, and the signal feeds back into the car’s control systems so that adjustments can be made based on what has been observed. So, if the car gets too close to an object, the brakes should be applied automatically.
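The ranging principle behind this is time of flight: the pulse travels out and back, so the distance to the object is the speed of light multiplied by the round-trip time, divided by two. The sketch below shows that calculation plus a braking check; the 5 m threshold and the function names are hypothetical, and real automatic braking logic also weighs vehicle speed and closing rate.

```python
SPEED_OF_LIGHT_M_PER_S = 299_792_458.0


def distance_from_round_trip(round_trip_s: float) -> float:
    """The pulse travels out and back, so the one-way distance is c * t / 2."""
    if round_trip_s < 0:
        raise ValueError("round-trip time must be non-negative")
    return SPEED_OF_LIGHT_M_PER_S * round_trip_s / 2.0


def should_brake(round_trip_s: float, threshold_m: float = 5.0) -> bool:
    """Hypothetical rule: request braking if the echo comes from closer than threshold_m."""
    return distance_from_round_trip(round_trip_s) < threshold_m


if __name__ == "__main__":
    t = 20e-9  # a 20 ns round trip
    print(f"{distance_from_round_trip(t):.2f} m")  # 3.00 m
    print(should_brake(t))  # True with the default 5 m threshold
```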

‘Speed is critical, because you have a pulsed laser diode pulsing at nanoseconds that has to move to the vehicle, be reflected, and then be detected and processed, and then adjustments to the car made,’ said Barry. ‘You can’t afford to have any delays in the system… the quicker you can get all of these elements the better.’
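To put Barry’s point in numbers: the light’s own flight time is tiny, so almost all of the delay budget is spent detecting, processing and acting on the signal. The figures in this sketch (a 100 m target, 108 km/h and a 100 ms total system delay) are illustrative assumptions, not specifications of any product.

```python
SPEED_OF_LIGHT_M_PER_S = 299_792_458.0


def light_round_trip_s(distance_m: float) -> float:
    """Time for a pulse to reach an object distance_m away and return."""
    return 2.0 * distance_m / SPEED_OF_LIGHT_M_PER_S


def distance_travelled_m(speed_km_per_h: float, delay_s: float) -> float:
    """How far the car moves while the system is still detecting and processing."""
    return speed_km_per_h / 3.6 * delay_s


if __name__ == "__main__":
    # Illustrative numbers only: object 100 m ahead, car at 108 km/h (30 m/s),
    # hypothetical 100 ms total delay for detection, processing and actuation.
    print(f"light round trip: {light_round_trip_s(100.0) * 1e9:.0f} ns")  # 667 ns
    print(f"car moves {distance_travelled_m(108.0, 0.100):.2f} m during the delay")  # 3.00 m
```

The comparison shows why every stage after detection matters: the light needs well under a microsecond, while a tenth of a second of processing lets the car cover several metres.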

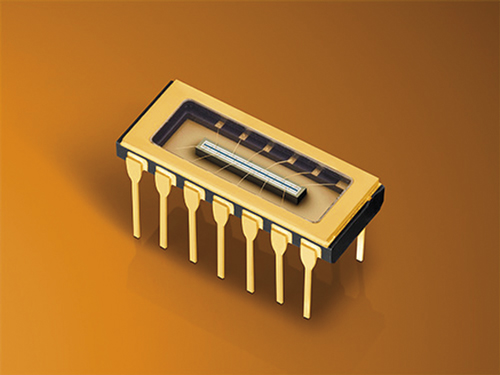

Excelitas has also released a component designed specifically for lidar applications: its 1x4 pulsed laser diode array.

According to Excelitas’ Boudreau, customers are increasingly requesting sensor arrays rather than single-pixel detectors. Arrays will become more important for autonomous-vehicle lidar going forward, he added, especially as lidar systems still need to improve in resolution before vehicles can become fully autonomous.

‘For level five systems, the technology needs to evolve – lidar will have become higher in resolution than what’s available today,’ Boudreau said. ‘So, going from single pixel to arrays is definitely a trend that’s already there and I think this will continue on the component front to get the technology up in resolution.’

Laser Components’ SAH1L12 series is a 12-element detector. ‘The advantages of this as opposed to a single-element approach are that you drastically reduce the dead space in between elements… So there is a continuous spectral response across the array,’ Barry said. ‘The gap in between these elements is 40µm; if you compare that with having 12 single-element devices, aligning with that sort of level is difficult. So, this does offer a nice high-resolution alternative.’

Another trend that both Excelitas and Laser Components have picked up on is a move further into the infrared, to 1,550nm. This is largely because higher-power light sources can be used at this wavelength while still remaining eye safe.

‘The requirements for safety [at 1,550nm] are slightly different – you can push the power of the source a little bit more before you fall into the same category for laser eye safety,’ Barry said. ‘Light at 1,550nm is much more capable of penetrating [precipitation] in the air. So if it’s a foggy day, for example, 1,550nm is more effective at dealing with that.’

‘So, there is a natural progression towards these sensors,’ he noted.

Laser Components' SAH1L12 series

However, Excelitas’ Boudreau pointed out that, currently, 1,550nm lasers are less efficient, so more lasers are required to achieve the same power level. In addition, the detectors for this wavelength are more expensive. ‘[The 1,550nm wavelength] has its advantages and disadvantages. But most of the lidar systems today look to design around the 905nm wavelength region,’ Boudreau said.

Future projections

At the moment, the most likely route to fully autonomous cars on the roads appears to be multi-modality. ‘I think all of the systems being looked at now – the most reliable – use multi-modality. They don’t just use cameras, or lidar, or radar – they’ll use all of the systems together,’ explained Boudreau. ‘The processing system has to collect signals from all of these different modalities and make an intelligent decision based on that.’
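One textbook way for a processing system to combine several modalities is inverse-variance weighting, where each sensor’s estimate counts in proportion to how certain it is. The sketch below fuses hypothetical camera, lidar and radar range readings; the uncertainty figures are invented for illustration, the method assumes independent, roughly Gaussian errors, and it is not a description of any vendor’s system (production stacks typically use more elaborate approaches such as Kalman filtering over time).

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class RangeEstimate:
    sensor: str          # e.g. "camera", "lidar", "radar"
    distance_m: float    # this sensor's estimate of the distance to an object
    std_dev_m: float     # assumed 1-sigma uncertainty of that estimate


def fuse_ranges(estimates: List[RangeEstimate]) -> Tuple[float, float]:
    """Inverse-variance weighted mean: more certain sensors get more weight.

    Returns (fused distance, standard deviation of the fused estimate).
    """
    if not estimates:
        raise ValueError("need at least one estimate")
    if any(e.std_dev_m <= 0 for e in estimates):
        raise ValueError("standard deviations must be positive")
    weights = [1.0 / e.std_dev_m ** 2 for e in estimates]
    total = sum(weights)
    fused = sum(w * e.distance_m for w, e in zip(weights, estimates)) / total
    return fused, total ** -0.5


if __name__ == "__main__":
    readings = [
        RangeEstimate("camera", 22.0, 2.0),
        RangeEstimate("lidar", 20.0, 0.5),
        RangeEstimate("radar", 21.0, 1.0),
    ]
    distance, sigma = fuse_ranges(readings)
    print(f"fused distance {distance:.2f} m +/- {sigma:.2f} m")  # 20.29 m +/- 0.44 m
```

The fused uncertainty comes out smaller than that of the best single sensor, which is one way to see why combining modalities can add reliability.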

But Boudreau added that there is also another possibility, whereby the artificial intelligence (AI) in the system is developed to the point that less imaging and sensing technology is required. ‘There is potential that if you could develop very strong AI, then one can imagine that eventually you could just have one camera, and then all of the processing is done in the software.

‘This is more like a human – we just have two eyes, but our brain makes all of the decisions. If we had a computer that had the processing power of a human, and [could] make decisions like a human, then one camera with that software would be sufficient.’

AI computing company Nvidia has been working on just this. In 2015, the company launched a project, called Dave2, to create a robust system for driving on public roads.

Using the Nvidia DevBox and Torch 7 (a machine learning library) for training, and an Nvidia Drive PX self-driving car computer for processing, the Nvidia team trained a convolutional neural network (CNN) on time-stamped video from a front-facing camera in the car, synchronised with the steering wheel angle applied by the human driver.
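The synchronisation step, which turns a recorded drive into labelled training examples, can be sketched as a nearest-timestamp match between camera frames and steering samples. This is a hypothetical illustration rather than Nvidia’s actual pipeline: the function names, the 50 ms tolerance and the sample data are all assumptions.

```python
from bisect import bisect_left
from typing import List, Tuple


def nearest_index(sorted_times: List[float], t: float) -> int:
    """Index of the timestamp closest to t (sorted_times must be non-empty and ascending)."""
    i = bisect_left(sorted_times, t)
    if i == 0:
        return 0
    if i == len(sorted_times):
        return len(sorted_times) - 1
    before, after = sorted_times[i - 1], sorted_times[i]
    return i if after - t < t - before else i - 1


def pair_frames_with_steering(
    frame_times: List[float],
    steering_times: List[float],
    steering_angles: List[float],
    max_gap_s: float = 0.05,
) -> List[Tuple[int, float]]:
    """Label each video frame with the steering angle recorded closest in time.

    Frames with no steering sample within max_gap_s are dropped.
    """
    if len(steering_times) != len(steering_angles):
        raise ValueError("steering_times and steering_angles must have equal length")
    if not steering_times:
        return []
    pairs = []
    for frame_index, t in enumerate(frame_times):
        j = nearest_index(steering_times, t)
        if abs(steering_times[j] - t) <= max_gap_s:
            pairs.append((frame_index, steering_angles[j]))
    return pairs


if __name__ == "__main__":
    frames = [0.0, 0.033, 0.067, 0.5]        # frame timestamps, seconds
    steer_t = [0.0, 0.02, 0.04, 0.06, 0.08]  # steering timestamps, seconds
    steer_deg = [0.0, 1.0, 2.0, 3.0, 4.0]    # steering angles, degrees
    print(pair_frames_with_steering(frames, steer_t, steer_deg))
    # [(0, 0.0), (1, 2.0), (2, 3.0)]
```

Binary search keeps each lookup logarithmic in the number of steering samples, which matters when hours of driving are logged at high sample rates.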

The 1x4 pulsed laser diode array from Excelitas

The company collected most of the road data in New Jersey, including two-lane roads with and without lane markings, residential streets with parked cars, tunnels and even unpaved pathways. Data was collected in clear, cloudy, foggy, snowy and rainy weather, both day and night.

Using this data, the CNN was trained to produce the same steering command a human driver would give for a particular view of the road, and was then evaluated in simulation. A simulator took videos from the data-collection vehicle and generated images that approximate what would appear if the CNN were steering the vehicle.
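In Nvidia’s 2016 description of this work, the network’s weights were adjusted to minimise the mean-squared error between the steering command it outputs and the human driver’s command. The sketch below shows only that loss and its gradient with respect to the predictions (the starting point for backpropagation); the CNN itself is omitted, and the function names and sample values are made up for illustration.

```python
from typing import List, Sequence


def mean_squared_error(predicted: Sequence[float], human: Sequence[float]) -> float:
    """Average squared difference between the network's and the driver's steering commands."""
    if len(predicted) != len(human) or not predicted:
        raise ValueError("need two non-empty sequences of equal length")
    return sum((p - h) ** 2 for p, h in zip(predicted, human)) / len(predicted)


def mse_gradient(predicted: Sequence[float], human: Sequence[float]) -> List[float]:
    """d(MSE)/d(prediction_i) = 2 * (p_i - h_i) / n; backpropagation starts from this."""
    if len(predicted) != len(human) or not predicted:
        raise ValueError("need two non-empty sequences of equal length")
    n = len(predicted)
    return [2.0 * (p - h) / n for p, h in zip(predicted, human)]


if __name__ == "__main__":
    net_out = [0.1, -0.2, 0.05]     # hypothetical network steering commands
    driver = [0.0, -0.1, 0.05]      # hypothetical human commands for the same frames
    print(round(mean_squared_error(net_out, driver), 6))  # 0.006667
    print(mse_gradient(net_out, driver))
```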

During road tests, the vehicle drove along paved and unpaved roads with and without lane markings, and handled a wide range of weather conditions. As more training data was gathered, performance continually improved.

Nvidia has said its engineering team never explicitly trained the CNN to detect road outlines. Instead, using the human steering wheel angles for each view of the road as its guide, the network learned the rules of engagement between vehicle and road on its own.

‘[Deep learning] could be the ultimate end state – it’s very hard to tell at this point. But at the moment, the multi-modality will be the most popular,’ Boudreau concluded.