Photonic systems have for decades provided physicists with a rich playground for exploring quantum phenomena, and more recently they have formed the basis of the first practical implementations of quantum communications and cryptography. But photonics is also set to play a vital role in the emergence of quantum computers that within the next 10 to 15 years are expected to revolutionise tasks that depend on processing power – ranging from financial modelling and drug discovery through to predicting long-term changes in the Earth's climate.

At the heart of a quantum computer are qubits – each one a two-state quantum system such as an electron that can be spin-up or spin-down, or a photon with either vertical or horizontal polarisation. Unlike the bits in a classical computer, however, these quantum elements can exist in both states at the same time. In this superposition a qubit can store more information than a conventional bit, essentially allowing it to perform multiple calculations at the same time.

A single qubit does not offer much advantage over its classical counterpart; the real power comes when more qubits are connected together through entanglement. Adding extra qubits to an entangled network yields an exponential increase in the complexity of operations that can be performed, which would enable a quantum computer to solve problems that are beyond the reach of the most powerful classical computers.
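
To give a feel for that exponential growth, here is a minimal Python sketch. It assumes the standard state-vector picture, in which describing n entangled qubits on a classical machine takes 2^n complex amplitudes, and an illustrative 16 bytes per amplitude. The function names are hypothetical and chosen only for this example.

```python
# Assumption: each complex amplitude is stored as two 64-bit floats.
BYTES_PER_AMPLITUDE = 16


def amplitudes(n_qubits: int) -> int:
    """Number of complex amplitudes needed to describe n entangled qubits."""
    return 2 ** n_qubits


def memory_bytes(n_qubits: int) -> int:
    """Classical memory needed to hold the full state vector."""
    return amplitudes(n_qubits) * BYTES_PER_AMPLITUDE


# Print the growth for a few illustrative register sizes.
for n in (10, 30, 50):
    print(f"{n} qubits: {amplitudes(n):,} amplitudes, {memory_bytes(n):,} bytes")
```

Each extra qubit doubles both figures, which is why a classical simulation of an entangled register runs out of memory so quickly.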

While qubits can be realised in a variety of physical systems, the beauty of photons is that they offer a route to building a quantum computer that operates at room temperature. Other leading contenders, such as technology platforms based on superconducting qubits and trapped ions, must be maintained at ultralow temperatures inside expensive and energy-intensive cryostats. The fragility of the quantum states in these systems also makes them extremely sensitive to noise, leading to errors that degrade their overall performance.

‘The energy of a photon is much higher than the energy of background radiation at room temperature, which means that the quantum information encoded in a photon isn’t disturbed by the environment,’ explained Kris Kaczmarek, a quantum physicist who is now head of product at quantum start-up Orca Computing. ‘Photons are not affected by the decoherence and noise that usually kills quantum computers.’

In 2020 a landmark study by a group of Chinese researchers demonstrated for the first time that a photonics processor can achieve quantum advantage – in other words, it can solve a problem much faster than a classical computer. They built a machine called a boson sampler, essentially a huge interferometer containing a complex network of optical components. This hardware platform sends identical photons through many different paths, causing them to interact with each other along the way, and then measures the state of the photons at the output. According to the researchers, the sampling rate measured in the experiment betters the capabilities of a conventional supercomputer by a factor of 10^14.

While undoubtedly impressive, the complexity and size of this experiment don't lend themselves to developing a practical quantum computer. The experiment does, however, neatly illustrate the single biggest challenge with using photonics to build quantum computers, which is that almost all photonic interactions are governed by probability. ‘Building a useful photonic quantum computer requires a lot of probabilistic operations, each one with a non-unit chance of success,’ explained Kaczmarek. ‘The overall success rate of a large algorithm can become vanishingly small.’
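
A rough sketch of why the success rate collapses: if every operation must succeed and each does so independently with the same probability, the overall probability is that per-step probability raised to the number of steps. The independence assumption, the 90% per-step figure and the function name are illustrative choices for this example, not values from the researchers or from Orca.

```python
def overall_success(p_step: float, n_steps: int) -> float:
    """Probability that n independent steps all succeed,
    when each step succeeds with probability p_step."""
    return p_step ** n_steps


# Illustrative assumption: every operation succeeds 90% of the time.
for n in (10, 100, 1000):
    print(f"{n} operations: overall success = {overall_success(0.9, n):.3e}")
```

Even with a per-step success rate that sounds high, the product shrinks geometrically as the algorithm grows, which is the scaling problem that multiplexing sets out to address.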

This probabilistic nature of photonic interactions affects each key function in a quantum computer: from generating single photons at the input through to creating the entangled states and measuring the outcome of the quantum calculation. That makes it easy in photonics to implement single-qubit logical gates, but much harder to achieve two-qubit operations in which the state of one qubit is changed based on the state of another one.

The solution in photonics is to exploit multiplexing: to attempt the desired operation many times in the knowledge that occasionally it will succeed. ‘Multiplexing has traditionally been achieved by having lots of optical components performing the same operation in parallel,’ explained Kaczmarek. ‘If you need to produce five photons, say, you need lots of photon sources operating with a low probability to guarantee that between all of them they will generate the five photons you need.’
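
Kaczmarek's five-photon example can be put into rough numbers with a binomial calculation. The sketch below assumes that every source fires independently with the same probability; the 5% firing probability and the 99% target are illustrative assumptions, and the function names are hypothetical.

```python
from math import comb


def prob_at_least(k: int, n: int, p: float) -> float:
    """Probability that at least k of n independent sources fire,
    when each fires with probability p (binomial tail)."""
    return sum(comb(n, j) * p**j * (1 - p) ** (n - j) for j in range(k, n + 1))


def sources_needed(k: int, p: float, target: float) -> int:
    """Smallest number of parallel sources that yields at least k photons
    with probability >= target."""
    n = k
    while prob_at_least(k, n, p) < target:
        n += 1
    return n


# Illustrative assumption: each source fires 5% of the time, and we want
# five photons with 99% confidence.
print(sources_needed(k=5, p=0.05, target=0.99))
```

Under these assumptions the answer runs to hundreds of sources for just five photons, which gives a sense of the hardware overhead that spatial multiplexing carries.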

That need for redundancy explains the size and complexity of the Chinese experiment, but start-up companies around the world are developing more practical solutions to the multiplexing challenge. PsiQuantum, for example, a US-based company that has just raised investor funding totalling $450m, plans to build a large-scale quantum computer by integrating millions of optical components together on a silicon photonics chip. The company has formed a partnership with top-tier semiconductor manufacturer GlobalFoundries to develop and manufacture the devices, and says that photonic circuits for single-photon generation are now being fabricated using proven production processes. More work is needed though to develop silicon photonics chips that are capable of creating entangled states and executing quantum algorithms.

Orca Computing, meanwhile, is taking a less brute-force approach to the multiplexing challenge. ‘We have developed a quantum memory that makes it possible to trap photons and release them when they are needed,’ said Kaczmarek. ‘This eliminates the overhead that is traditionally associated with photonics systems, since it allows us to do the multiplexing in time rather than space.’

This time-based multiplexing requires only a single optical component for each operation: rather than running many copies in parallel, it attempts the operation on a photon many times and captures the photon when it succeeds. Trapping and delaying photons inside these quantum memories offers a way to synchronise the successful outcomes from different operations, so that many fewer components are needed to execute a quantum algorithm.
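
The trade between space and time can be sketched with a simple geometric calculation. This is an idealised model, not Orca's implementation: it assumes a single component that succeeds independently with the same probability in every time slot, and a lossless memory that holds the photon once it has been captured. The 5% success probability, the 99% target and the function names are illustrative.

```python
def prob_within(p: float, m: int) -> float:
    """Probability that one component succeeds at least once in m attempts,
    when each attempt succeeds independently with probability p."""
    return 1 - (1 - p) ** m


def slots_needed(p: float, target: float) -> int:
    """Smallest number of time slots giving at least one success
    with probability >= target."""
    m = 1
    while prob_within(p, m) < target:
        m += 1
    return m


# Illustrative assumption: 5% success per attempt, 99% confidence wanted.
print(slots_needed(p=0.05, target=0.99))
```

In this picture the redundancy is paid for in repeated time slots on one device rather than in duplicated hardware; a real memory would add storage loss, which the sketch ignores.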

Orca has exploited these quantum memories to build a photonics processor that acts in a similar way to the boson sampler built by the Chinese researchers. ‘We can implement something very similar but with much fewer resources,’ explained Kaczmarek. ‘We just have a single photon source generating a stream of photons, and we use our memory-based processor to make them interact and measure the result.’

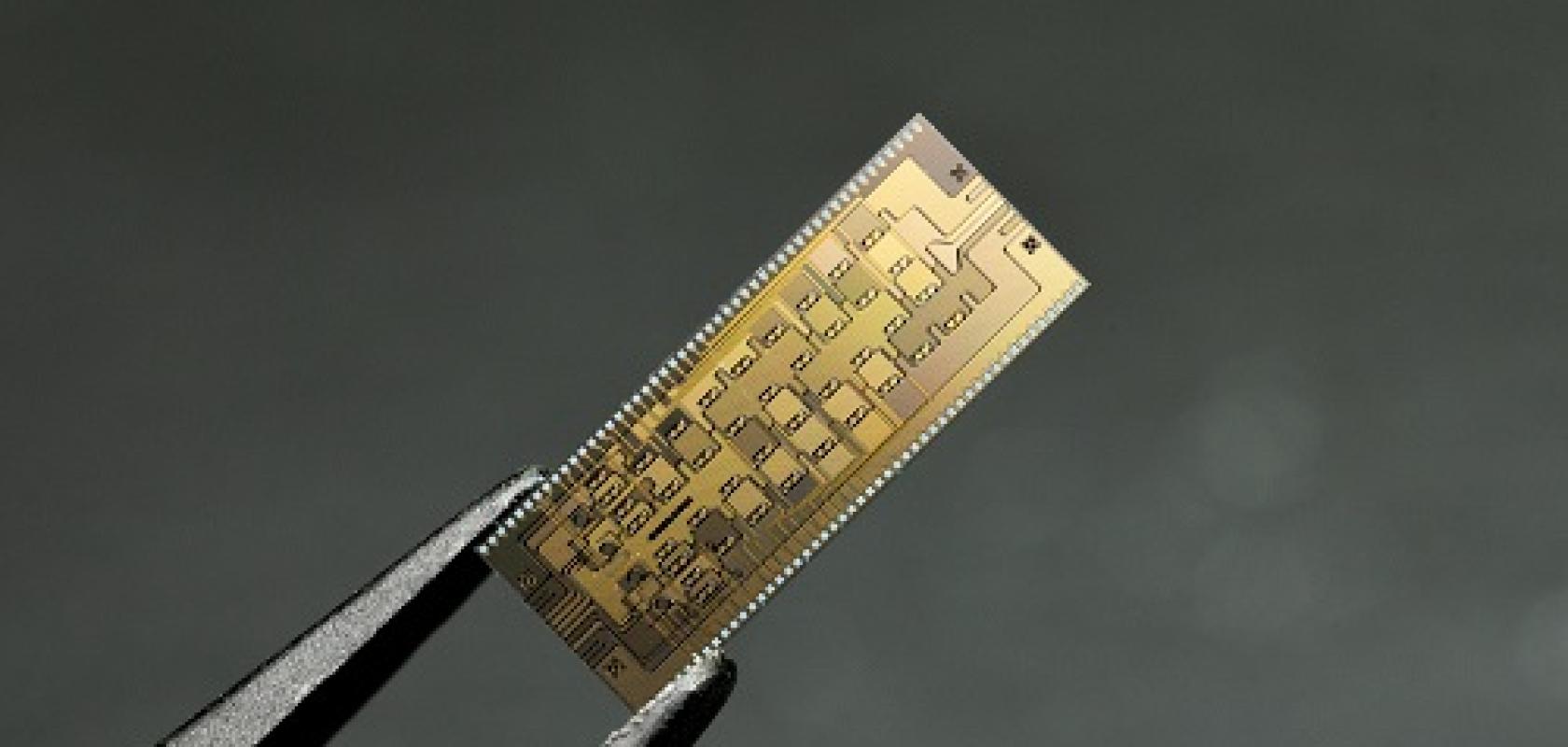

To deliver some near-term quantum advantage, Orca Computing is building hybrid systems that exploit specialised quantum processors to perform certain functions more efficiently than a standard silicon-based processor. Credit: Orca Computing

A boson sampler on its own is not that useful because it can only solve certain types of problems. But Orca realised that this highly specialised quantum processor could be integrated into a classical system to perform specific tasks. ‘Our current quantum processor is not powerful enough on its own to do useful computations, but it can do certain parts of a computation more efficiently than its classical counterpart,’ said Kaczmarek. ‘A hybrid system with a small quantum component inside a larger classical system offers a practical route to outperforming a conventional computer.’

Orca is already working with end users in fields such as logistics, finance and chemistry to develop applications for this hybrid approach. ‘You need a device that can talk efficiently to a classical computer,’ said Kaczmarek. ‘Our system can do that because it's built from telecoms components that are already meant to interface with existing computing infrastructure.’

While this small and specialised quantum processor already offers some benefit for specific applications, Kaczmarek points out that it also provides a useful stepping stone towards large-scale quantum machines that will be able to tackle any type of computational problem. ‘All the components we’re developing for these small-scale quantum processors will eventually feed into our universal computer,’ he said.

Meanwhile, Canadian start-up Xanadu has sought to eliminate some of the inherent uncertainty in light-based systems by replacing single photons with so-called ‘squeezed states’ consisting of superpositions of multiple photons. ‘That gives you access to the full optical mode, not just a two-level qubit,’ explained Zachary Vernon, head of hardware at Xanadu.

One key advantage of these squeezed states is that they are not subject to the same probabilistic rules as single photons. ‘Squeezed states are generated deterministically every time, and they also become entangled in a deterministic way,’ continued Vernon. ‘Squeezing-based protocols are more amenable to scaling because there is less need to build in the overhead needed to compensate for the intrinsic failure rate of single-photon-based systems.’

Xanadu has already built a series of small-scale quantum chips that can be programmed for specific applications via the Cloud. Built on a silicon-nitride platform using industry-standard manufacturing methods, the X-series of chips is available in 8- and 12-qubit versions, with a 24-qubit processor also constructed and a 40-qubit chip now at the fabrication stage.

Squeezed states are generated on the chip by coupling laser pulses to an array of microring resonators, and then they are entangled together by an interferometer consisting of a series of beam splitters and phase shifters. ‘Both the squeezed states and the entangling network can be programmed,’ said Vernon. ‘The quantum algorithm actuates the phase shifters on the chip to implement different gate settings and entangle the squeezed states in a different way.’ The result from the entanglement is measured by superconducting detectors on a separate chip, which Vernon said is the only part of the set-up that needs to be kept cold.

These small quantum chips have again been designed for boson sampling, which could be interesting in its own right for studying the interactions between squeezed states. More importantly, said Vernon, the X-series offers a crucial building block for creating a universal quantum computer that will outperform classical computers.

‘The X-series chips are really a test case for cloud deployment of near-term quantum hardware, and they are far too small to achieve quantum advantage,’ he said. ‘More interesting for us is that highly optimised versions of these X-series devices can be used to synthesise a special type of quantum state that we can use as the qubits in a universal quantum computer.’

Rather than measuring the output from the interferometer, in this scaled-up version the entangled squeezed states produced by the X-series would act as an indicator that heralds the formation of so-called GKP states. ‘These GKP states have an intrinsic robustness against errors, in particular against photon loss,’ explained Vernon. ‘You need squeezed states to access these more complicated and error-resistant quantum states, which can then be used as the qubits in a general-purpose quantum computer.’

While GKP quantum states act in a deterministic way, the event that indicates the formation of this complex quantum state doesn't happen every time. ‘No photonic system can evade non-deterministic operation entirely,’ said Vernon. ‘There's a probability associated with detecting that event pattern, and therefore with generating a GKP state.’

In the near term, the main goal for Xanadu is to build an error-corrected module. Vernon said that the single biggest challenge will be to create highly optimised versions of the X-series devices, in particular to reduce photon loss due to material imperfections and the coupling to optical fibre at the output. Another key task will be to fabricate large numbers of identical X8 chips and connect them together in parallel to produce large numbers of GKP qubits. ‘We also need to build the components required to perform gate operations with the GKP qubits, but that's less challenging than building a high-performance X-series device,’ he said.

Kaczmarek agreed that the main barrier to building a universal photonics quantum computer lies in building effective few-qubit quantum machines. ‘The brilliant thing about photonics is that once you can build very good small photonic systems you can easily link them together, which makes it much simpler to scale and make them universal,’ he said. ‘First we need to optimise the performance of the individual components, and then we can start building the computational resources needed for universal computation.’

Modulators get to the heart of quantum photonics

Quantum photonics is the science and technology of light that relies on quantum effects. It is an emerging field that is expanding rapidly – so much so that it is becoming part of our daily lives. Applications are appearing across many markets and sectors, including quantum gravity sensing, computing, imaging and secure communications.

Today, with the threat of hackers and the need to keep our information safe, the community is aiming to develop quantum communication systems. These secure communications work via quantum key distribution, a method of transmitting information using entangled light in a way which makes any interception of transmissions obvious to the user. Another technology in this field is the quantum random number generator used to protect data. This produces truly random numbers – unlike algorithmic procedures, which merely imitate randomness.

These communication systems use quantum effects to distribute encryption keys. Encryption keys secure sensitive data transmissions, which we experience in our daily lives from bank transactions to e-commerce, defence and security, health records and space satellite communications.

Customer support

Involving sizeable companies as well as start-ups, substantial work is taking place to develop quantum computers and eventually provide quantum computing at our fingertips. In partnership with iXblue, Laser Components supports customers involved in this field with electro optic modulators (EOMs).

This technology harnesses the phenomena of quantum mechanics to deliver a huge leap forward in computation, solving certain problems that today’s most powerful supercomputers cannot. The need for quantum computers is clear. It will be a revolution: the technology will enable us to solve complex problems in finance, drug discovery, logistics and much more, and could cut calculation times from hours, weeks or even years to minutes or a fraction of a second. This reduction is chiefly because a qubit, unlike an ordinary binary bit that is either 0 or 1, can occupy both states simultaneously. Quantum computing will drastically change our world for good.

Quantum imaging, which is related to the field of quantum optics, exploits this two-state phenomenon to create a high-resolution image beyond the scope of classical optics. This can be achieved using different methods.

Quantum imaging techniques

The first method uses scattered light from a free-electron laser. This approach converts the light to quasi-monochromatic pseudo-thermal light. Another method known as interaction-free imaging is used to locate an object without absorbing photons. The final method of quantum imaging is known as ghost imaging. This process uses a photon pair to define an image. The image is created by correlations between the two photons; the stronger the correlations, the greater the resolution.

Quantum imaging techniques can improve the detection of faint objects, the amplification of images and the accurate positioning of lasers. Today, quantum imaging (mostly ghost imaging) is being studied and tested for military and medical use.

The military aims to use ghost imaging to detect enemies and objects in situations where the naked eye and traditional cameras fail. For example, if an enemy or object is hidden in a cloud of smoke or dust, ghost imaging can help to locate it. In the medical field, imaging is used to increase the accuracy and lessen the amount of radiation a patient is exposed to during x-rays.

Ghost imaging could allow doctors to look at a part of the human body without having direct contact, thereby lowering the amount of direct radiation which reaches the patient. As in military applications, it is used to look at concealed objects such as bones and organs, using light with beneficial properties.

Other applications exploit quantum mechanisms – such as quantum entanglement, quantum interference and quantum state squeezing – to detect the undetectable through quantum sensing. Laser Components, partnering with iXblue, has assisted several customers with dedicated EOMs for sensing applications, and continues to do so. With such improvements in precision, we will soon be able to navigate accurately underwater, to sense changes in gravity that reveal potential volcanic activity, climate change and earthquakes, to monitor brain activity on the go and even to see round corners.

In our everyday lives, quantum sensing will secure navigation and show us what is beneath our feet. This is a tremendous breakthrough for oil and gas industries that need to know where to dig to collect raw materials. It will also help civil engineers to plan their construction projects, saving time and money.

Quantum photonics will be, and already is, a big turning point in the years to come. iXblue and Laser Components are very proud to be part of this game-changing technology.

Further information www.lasercomponents.com/uk/product/fiber-opticalmodulators