In March 2021, Honda unveiled a partially autonomous car that it says can take over from a human driver in traffic jams. This special edition of the Honda Legend uses lidar laser illumination, alongside radar and cameras, to navigate at low speeds. Lidar measures the distance to nearby objects by calculating the round-trip time of a laser beam, building up a 3D map of the surroundings. Though automotive lidar is only a small fraction of the total lidar market at the moment, analysts from Yole Développement, which has been performing market research on lidar for more than five years, predict that by 2026 it could be worth $2.3bn, accounting for more than half of the market.

But most of us won’t be taking our eyes off the road anytime soon. The Honda Legend has a retail price of $102,000 and only 100 have been manufactured. The challenge for lidar companies, whether they’re making systems for cars, trucks or infrastructure for smart cities, is not just to make a machine that can compete with the human eye, but to make something that can be produced at scale, and at a cost that is comparable to the rest of the product.

Peter Stern is CEO of Voyant Photonics, a lidar start-up founded in 2018. He thinks the existing technology is reaching its lower limits in terms of cost. ‘When you take apart those devices and [analyse] them, you’ll see… it’s going to be very hard to take a $5,000 device today and make it a $100 device within a few years,’ he said.

Voyant is one of a small minority of companies taking a chance on silicon photonics, an approach that puts lidar on microchips. The firm recently secured $15.4m in funding to develop its technology commercially and is building prototypes and development kits for potential customers.

Voyant, along with other chip-scale lidar companies, is grappling with a lidar system called frequency-modulated continuous-wave (FMCW), which lends itself better to chip integration. Just over a year ago, FMCW lidar company Aeva announced a collaboration with automotive supplier Denso, but cars using this technology have yet to appear on the roads. FMCW lidar has generated a lot of excitement, but there are considerable technical challenges to bringing it to market.

The lidar holy grail

Another company developing chip-scale FMCW lidar is Silicon Valley-based SiLC. SiLC’s VP of business development and marketing, Ralf Muenster, sees a future where lidar is used not only in self-driving cars, but also in delivery robots, athlete analysis and smart agriculture. Speaking shortly after the Consumer Electronics Show in Las Vegas, he even speculated that lidar sensors could be used in casino security cameras to detect fights breaking out. At CES, SiLC showcased its Eyeonic vision sensor, which the firm says is the world’s first commercially available chip-integrated FMCW lidar system.

Two decades before the company was founded, SiLC’s CEO Mehdi Asghari was the VP of research and development at Bookham, which pioneered silicon circuits powered by photons instead of electrons. Muenster said that SiLC’s waveguide technology has allowed the firm to shrink what would otherwise require metres of fibre for FMCW down onto a single chip. One of the big advantages of silicon photonics is that it uses existing semiconductor fabrication techniques, and benefits from the billions of dollars and many decades of research and investment that have gone into them.

However, when SiLC started integrating lidar technology onto silicon photonic chips, the firm ran into a problem. The lidar used by Honda, Google and other autonomous vehicle companies is time-of-flight, which illuminates the surroundings with short laser pulses. According to Muenster, though, the peak power of the time-of-flight laser ‘didn’t lend itself to integration on a single chip’. The firm needed an alternative, which is where FMCW comes in.

In FMCW lidar, the laser emits continuously, and the beam is split into two parts. Half the light is emitted, and the rest is kept on the chip and mixed with the returning signal. When the mixed light hits the photodetector, it’s the frequency modulation of FMCW that reveals the round-trip time. As it leaves the laser, the light is chirped by sweeping the frequency up and down, so that a plot of the laser frequency against time is a periodic waveform with its own frequency and amplitude. It’s the phase shift of this chirped waveform that is used to work out how far the returning light has travelled. Because FMCW uses the phase and frequency of the light, the sensor can filter out sunlight and signals from other lidars. It also means it can operate at lower peak power than time-of-flight, which makes it a good candidate to implement in semiconductor waveguides.
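As a rough illustration of the ranging principle, assume an idealised linear chirp and a stationary target. The returning light is a delayed copy of the chirp, so it differs from the on-chip reference by a constant beat frequency equal to the chirp slope multiplied by the round-trip time. The function name and the chirp figures below are illustrative assumptions, not parameters of any system described here.

```python
C = 299_792_458.0  # speed of light in vacuum, m/s


def fmcw_range_m(beat_hz: float, chirp_bandwidth_hz: float, chirp_duration_s: float) -> float:
    """Range to a stationary target from the measured beat frequency.

    Assumes an ideal linear chirp that sweeps `chirp_bandwidth_hz`
    in `chirp_duration_s` (both values are hypothetical inputs).
    """
    slope_hz_per_s = chirp_bandwidth_hz / chirp_duration_s
    round_trip_s = beat_hz / slope_hz_per_s  # delay between reference and return
    return C * round_trip_s / 2.0            # halve it: the light goes out and back


# Assumed example: a 1 GHz sweep lasting 10 microseconds, and a 20 MHz beat.
print(f"{fmcw_range_m(20e6, 1e9, 10e-6):.2f} m")
```

With these assumed numbers the round trip works out at 200 nanoseconds, which puts the target roughly 30m away.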

Muenster believes this interference immunity is just one of many advantages of FMCW, describing it as the holy grail of 3D sensing. Another powerful property of FMCW is that it can calculate instantaneous velocity, which could be a game changer for self-driving cars. A single photon reflected off a moving object will exhibit a frequency shift proportional to the object’s velocity, a phenomenon called the Doppler effect. As well as velocity, SiLC’s point clouds also generate information about the polarisation of the returned photons, which they think could be used to identify icy roads, or, in smart agriculture, to check whether crops need to be watered.
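A minimal sketch of how velocity can fall out of the same measurement, under three assumptions: a triangular chirp, a 1,550nm laser, and a sign convention in which an approaching target lowers the beat frequency on the up-sweep and raises it on the down-sweep. The Doppler shift of reflected light is twice the radial velocity divided by the wavelength. The function names and beat frequencies are hypothetical.

```python
WAVELENGTH_M = 1550e-9  # assumed operating wavelength, metres


def split_beats(f_up_hz: float, f_down_hz: float) -> tuple[float, float]:
    """Separate the range and Doppler parts of the two beat frequencies.

    Assumed convention: up-sweep beat = range beat - Doppler shift,
    down-sweep beat = range beat + Doppler shift (target approaching).
    """
    range_beat_hz = (f_up_hz + f_down_hz) / 2.0
    doppler_hz = (f_down_hz - f_up_hz) / 2.0
    return range_beat_hz, doppler_hz


def radial_velocity_m_s(doppler_hz: float, wavelength_m: float = WAVELENGTH_M) -> float:
    """Closing speed from the Doppler shift of reflected light (shift = 2v / wavelength)."""
    return doppler_hz * wavelength_m / 2.0


# Assumed example beats measured on the up- and down-sweeps.
range_beat, doppler = split_beats(7.1e6, 32.9e6)
print(f"range beat: {range_beat / 1e6:.1f} MHz")
print(f"closing speed: {radial_velocity_m_s(doppler):.1f} m/s")
```

Averaging the two beats recovers the range term, while half their difference isolates the Doppler term, which is why a single up-and-down sweep can yield both distance and velocity for each point.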

Muenster also thinks that FMCW can beat time-of-flight for precision. He described what he calls an ‘alignment problem’ with time-of-flight, where the beginning and end of even a short, three-nanosecond pulse spreads out over a few feet, creating uncertainty in the position of an object. Muenster said the Eyeonic vision sensor can reach millimetre-level precision at a range of 30m, whereas time-of-flight typically gives centimetre-level precision.
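The arithmetic behind the pulse-length figure can be sketched using only the speed of light. The function names are illustrative, and the 200-nanosecond round trip is an assumed example chosen to correspond to a target about 30m away.

```python
C = 299_792_458.0        # speed of light in vacuum, m/s
METRES_PER_FOOT = 0.3048


def tof_distance_m(round_trip_s: float) -> float:
    """Distance to a target from the round-trip time of a laser pulse."""
    return C * round_trip_s / 2.0


def pulse_length_m(pulse_duration_s: float) -> float:
    """Physical length of a light pulse of the given duration."""
    return C * pulse_duration_s


length_m = pulse_length_m(3e-9)
print(f"3 ns pulse spans {length_m:.2f} m ({length_m / METRES_PER_FOOT:.1f} ft)")
print(f"200 ns round trip -> {tof_distance_m(200e-9):.1f} m")
```

A three-nanosecond pulse is a little under a metre long, or roughly three feet, which is the spread Muenster refers to.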

Another advantage of FMCW is that the returning signal gets amplified when it is mixed with the local oscillator, so costly avalanche photodetectors can be swapped for less sensitive PIN diodes.

While he also discussed the high range, low noise potential of FMCW, Stern thinks that even if FMCW lidar can’t beat time-of-flight for performance, the low cost and scalability of silicon photonics make it the superior technology. He likens it to vacuum tubes versus transistors; vacuum tubes are faster than transistors, but they’re only used in very specialised applications because they’re expensive and bulky. Stern thinks that FMCW will replace time-of-flight because it enables silicon photonic lidar, saying FMCW is effective enough, and that coupled with it being low-cost, ‘it will be unbeatable’.

Commercialisation challenges

Intel is also looking at silicon photonic lidar, saying it wants to put autonomous cars on the roads by 2025. But not everyone thinks it’s time to switch. Yole estimates that of the 80 or so lidar companies operating, about 80 per cent are still using time-of-flight.

One challenge for FMCW is that it requires a very stable laser. In time-of-flight, increasing the laser power increases the sensing range, but because FMCW uses the phase and frequency of photons to measure distance, the coherence length of the laser sets a maximum sensing distance. Single-frequency operation can be achieved using gratings, which select a single laser mode, though this adds complexity and cost to the lidar compared to pulsed operation. Balanced photodetectors, which mix the local and returning signal and extract the phase and frequency, also entail additional hardware. And while the peak power might be lower than in time-of-flight, the laser still needs an average power of a few hundred milliwatts to overcome losses in the silicon waveguides.
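To give a feel for the coherence constraint, a common rule of thumb for a laser with a Lorentzian line shape puts the coherence length at c/(πΔν), where Δν is the laser linewidth. Definitions differ by constant factors and real systems can behave differently, so this is an order-of-magnitude sketch only. Because the light travels out and back, the sketch takes half the coherence length as the range limit. The 100kHz linewidth is an assumed example value, and the function names are hypothetical.

```python
import math

C = 299_792_458.0  # speed of light in vacuum, m/s


def coherence_length_m(linewidth_hz: float) -> float:
    """Rule-of-thumb coherence length for a Lorentzian line shape."""
    return C / (math.pi * linewidth_hz)


def rough_max_range_m(linewidth_hz: float) -> float:
    """Rough range limit, assuming the round trip must fit in one coherence length."""
    return coherence_length_m(linewidth_hz) / 2.0


# Assumed example linewidths, from a narrow to a broader laser.
for linewidth_hz in (100e3, 1e6, 10e6):
    print(f"{linewidth_hz / 1e3:>8.0f} kHz -> "
          f"coherence {coherence_length_m(linewidth_hz):7.1f} m, "
          f"range ~{rough_max_range_m(linewidth_hz):6.1f} m")
```

Under this rule of thumb, every tenfold increase in linewidth cuts the usable range by a factor of ten, which is one way of seeing why FMCW places such demands on laser stability.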

The light emitted by the laser on an FMCW chip is also generally a different wavelength to time-of-flight. Light at 905nm is generally used in time-of-flight because the lasers are widespread and cost-effective, but they’re not compatible with silicon waveguides. Instead, FMCW generally uses 1,550nm, which comes with its own set of strengths and weaknesses. An advantage of 1,550nm is that it’s eye-safe at higher power levels than 905nm, which could make FMCW suitable for longer range applications. On the other hand, while 905nm light is reflected by water, 1,550nm is absorbed. This means the power of an FMCW lidar must be ramped up in rain or fog, though Muenster thinks this is preferable to having the light scattered before it can reach nearby objects.

Logistics is one area where chip-scale lidar could find a use. Credit: shutterstock.com

While silicon chips are cheaper than discrete FMCW components, photons are trickier to manipulate on a tiny scale than electrons. Stern likens designing a photonic chip to building a rocket, where the shape of every component must be painstakingly designed for aerodynamics and heat dissipation. Describing the challenge of taking silicon photonics from the lab to the foundry, he said: ‘Anybody on paper can design a silicon photonic lidar system. They can run 50 wafers, test them all with three grad students and then find the one chip that works. Obviously, that’s not going to work for a commercial entity.’

When a large batch of chips is produced in a CMOS foundry, their properties will always vary slightly; these effects are well understood for electronic circuits but not for photonics. Stern said that every time Voyant has sent a photonic circuit for manufacture, they’ve observed photonic effects the foundry hadn’t seen before.

Solid-state lidar

One company that’s sticking with time-of-flight for now is Seattle-based Lumotive, which was founded in 2018 and counts Bill Gates among its investors. Lumotive’s focus is on solid-state lidar, meaning its system steers the laser beam without moving parts. Gleb Akselrod, Lumotive’s founder, said the firm is ‘fans of all kinds of lidar’. However, its M30 system, which was launched at CES, is time-of-flight, and Akselrod thinks the company’s products have advantages over silicon photonic approaches.

Lumotive gets around the problem of silicon waveguide losses by doing away with waveguides entirely. The company has patented a mirrored metasurface; a flat array of liquid crystal pixels and nanoantennas that can tune the reflection angle without moving the laser.

Akselrod pointed out that FMCW lidars typically use additional hardware for beam steering, meaning they aren’t yet a single-chip solution. Optical phased arrays (OPAs) are a promising silicon photonics-based beam steering method, but reducing energy losses, steering the beam in 2D and increasing the detection range are practical challenges to implementing them outside a laboratory.

Lumotive’s technology is compatible with FMCW and it has FMCW partners, but Akselrod said: ‘Frankly, there are just more applications... outside of automotive for time-of-flight lidar.’

But he also sees the two technologies as complementary. FMCW offers high precision and long range, but at high cost and complexity, which he thinks makes it suitable for a single lidar working alongside lower-cost, medium-range time-of-flight lidars with a wide field of view.

Outlook for FMCW

Pierrick Boulay is an analyst from Yole, and while he foresees a possibility that FMCW could eventually replace time-of-flight, he doesn’t see automotive FMCW hitting the shelves before 2025. Boulay said that the long development cycle puts FMCW companies off automotive lidar in the short term. SiLC has partnerships with OEMs and tier one suppliers, alongside industrial applications, but Voyant is focusing on industry. Stern sees this as an opportunity, saying ‘right now, our target markets are things like industrial safety, industrial robotics, logistics – things where they don’t use lidar now because of the cost.’

The first time most of us take our hands off the wheel, it probably won’t be an FMCW lidar taking charge, but silicon photonics is here to stay, and it might lead to lidar popping up in unexpected places.

Sponsored content

Calibrating automotive lidar and ADAS systems using diffuse reflectance targets

This case study will explain how diffuse reflectance targets are employed in the calibration and testing of machine vision systems, image sensors, hyperspectral imaging cameras, remote sensing devices, and increasingly for the sensors used in lidar and ADAS autonomous vehicle systems.

Diffuse reflectance is the property whereby a material reflects with equal radiance (or brightness) in all directions, regardless of the direction of illumination or the direction of view. This allows the lidar and ADAS sensors to be tested under ‘real world’ conditions.

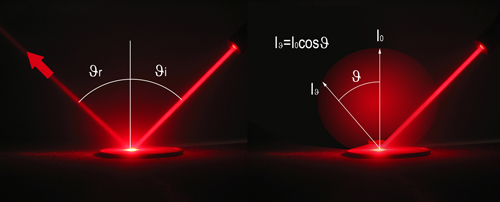

In elementary physics, we are taught to think of reflectance in terms of specular or glossy surfaces, where the angle of incidence equals the angle of reflectance and rays of light are well controlled. In real life, very few surfaces are truly specular. Most ‘glossy’ surfaces will exhibit a small degree of near-specular reflectance, and we refer to this near-specular reflected (or transmitted) light as haze.

The geometric opposite of a specular reflector is a diffuse reflector, a material that reflects (or transmits) light equally in all directions, and one that appears equally bright to an observer, regardless of the angle from which they view it. These properties describe a Lambertian reflector, named after the 18th century Swiss polymath Johann Lambert, who defined the concept of a perfect diffuse reflector in his 1760 book, ‘Photometria’.

Figure 1: Specular reflectance (left) versus diffuse reflectance (right). Credit: Pro-Lite

A material that exhibits Lambertian reflectance is one that is perfectly matte, or diffusely reflecting, and that obeys Lambert’s Cosine Law. The cosine law tells us that the reflected intensity (the flux per unit solid angle) varies with the cosine of the angle between the direction of view and the surface normal. If instead we consider the reflected luminance (the flux per unit solid angle per unit projected area), the surface remains equally bright (same luminance) regardless of the direction of view or direction of illumination.
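The two statements can be checked numerically with a short sketch. Viewed at an angle, the surface presents a projected area that also shrinks with the cosine of the viewing angle, so the cosine in the intensity cancels and the luminance stays constant. The function names, the 100-unit normal intensity and the 0.5m² target area are assumed illustrative values.

```python
import math


def lambertian_intensity(normal_intensity: float, view_angle_deg: float) -> float:
    """Intensity (flux per unit solid angle) of an ideal Lambertian surface."""
    return normal_intensity * math.cos(math.radians(view_angle_deg))


def luminance(intensity: float, area_m2: float, view_angle_deg: float) -> float:
    """Intensity divided by the area the viewer sees (the projected area)."""
    projected_area_m2 = area_m2 * math.cos(math.radians(view_angle_deg))
    return intensity / projected_area_m2


AREA_M2 = 0.5  # assumed target area
for angle_deg in (0, 30, 60):
    intensity = lambertian_intensity(100.0, angle_deg)
    print(f"{angle_deg:2d} deg: intensity {intensity:6.1f}, "
          f"luminance {luminance(intensity, AREA_M2, angle_deg):6.1f}")
```

The intensity falls to half its normal value at 60 degrees, while the luminance is the same at every angle, which is the property that makes such targets useful for calibration.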

This property is exploited when testing the performance of lidar and ADAS sensors. As with the human vision system, what counts with camera sensors is the luminance of light reflected from (or transmitted through or emitted from) a surface. The constant brightness of a diffusely reflecting surface ensures that the sensor receives a stable level of light, regardless of the angle of view or illumination, which in turn greatly simplifies the calibration of camera systems.

Pro-Lite serves customers working with lidar and ADAS sensors with certified targets of diffuse reflectance made by our partners at Labsphere (USA) and SphereOptics (Germany). Labsphere’s Spectralon and Permaflect targets, as well as the Zenith and Zenith Lite targets made by SphereOptics, provide near-perfect diffuse reflectance in the 250-2,500nm spectral range, and in particular at the critical NIR wavelengths used with lidar and ADAS systems.

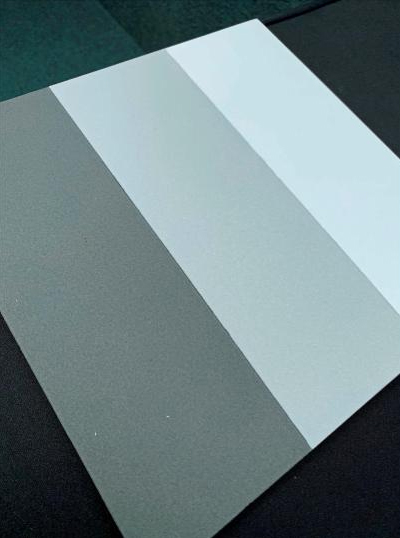

Spectralon and Zenith diffuse reflectance targets are based upon proprietary sintered PTFE. In their natural (white) state, both materials provide diffuse reflectance over a 2π steradian field with a reflectance factor of up to 0.99. By doping the PTFE, grey-scale reflectance targets can be produced in levels from 2 per cent. Labsphere’s Permaflect is a coating rather than a bulk reflector, but it also possesses near-perfect Lambertian reflectance in the UV-VIS-NIR and can also be offered both in white and various shades of grey.

Figure 2: Labsphere Spectralon diffuse reflectance contrast target. Credit: Pro-Lite

For satellite ground-truthing experiments and for field trials of lidar and ADAS systems, the reflectance targets used need to be of a relatively large area. To that end, both Labsphere and SphereOptics targets are produced in standard sizes from a few cm up to 1m by 1.5m (1.2m by 2.4m for Permaflect) avoiding any seams (or gaps) between panels, which could lead to erroneous measurement data were you to assemble your own large area target panel from smaller tiles. Larger targets are needed in lidar and ADAS applications to reflect a representative number of points across the target surface over long-range test distances of several hundred metres.

Permaflect is a spray coating, so it can be applied to large areas or 3D shapes to simulate real-world objects. Few real-world objects are flat like a target panel, and a Permaflect coating gives such objects repeatable, near-Lambertian reflectance levels; it can be applied, for example, to a mannequin to simulate a pedestrian.

All Labsphere and SphereOptics targets are available with certified calibrations, including reflectance at specified wavelengths, full spectral reflectance traceable to NIST and/or PTB, reflectance uniformity over the target surface and BRDF (bidirectional reflectance distribution function, a standard measure of how Lambertian the surface reflectance is). Both Labsphere and SphereOptics maintain calibration laboratories that are accredited to ISO 17025 for reflectance measurements.

Further information: www.pro-lite.co.uk