Early efforts to map topographical features involved measuring tapes, compasses and aneroid barometers. But then came ‘light detection and ranging technology,’ or lidar, and a new era of geospatial surveying capabilities.

Meteorologists used this new technology in the 1960s to map cloud patterns from aircraft. Then, in 1971, lidar went into space to map the moon’s surface during the Apollo 15 mission. Now, lidar gives new perspectives on landslides on terra firma, sets the stage for autonomous vehicles, and is used to investigate undersea topography. Meanwhile, meteorologists are continuing to develop techniques that will eventually provide a satellite’s view of wind speed over the entire globe.

While the basic concept of lidar is simple – illuminating a target with laser light and measuring how long it takes for the reflected signal to return to the source – this technology is evolving to address problems never envisioned by the original developers.
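The timing principle described above can be sketched in a few lines of Python. This is only an illustration: the function names are made up for this example, and the calculation uses the vacuum speed of light, ignoring the small correction for the refractive index of air.

```python
# Speed of light in vacuum, in metres per second (exact SI value).
# Assumption: the slight slowing of light in air is ignored.
SPEED_OF_LIGHT_M_PER_S = 299_792_458.0


def range_from_round_trip(round_trip_seconds: float) -> float:
    """Return the one-way distance to the target in metres.

    The pulse travels to the target and back, so the distance
    is half of the total path covered in the measured time.
    """
    if round_trip_seconds < 0:
        raise ValueError("round-trip time cannot be negative")
    return SPEED_OF_LIGHT_M_PER_S * round_trip_seconds / 2.0


def round_trip_from_range(range_metres: float) -> float:
    """Return the out-and-back travel time in seconds for a given range."""
    if range_metres < 0:
        raise ValueError("range cannot be negative")
    return 2.0 * range_metres / SPEED_OF_LIGHT_M_PER_S
```

For a target 900 metres away, `round_trip_from_range(900.0)` comes to about six microseconds, which gives a sense of the timing precision a lidar receiver needs.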

Lenses for landslides

In 2014, a mudslide south of the British Columbia border in Oso, Washington State, claimed the lives of 43 people. Weeks after the landslide, University of Washington geomorphologist David Montgomery became aware of data from a 2003 airborne lidar survey collected by the Puget Sound Lidar Consortium. An additional survey detailing the slopes of the major landslides in 2006 showed previous slides and erosion chipping away at a shelf that had long buttressed the upper slope and the plateau near Oso. These new lidar images showed in striking detail that older and very large landslides exist elsewhere in the region around Oso.

‘[Lidar] is like a brand new pair of glasses,’ said Montgomery. ‘The level of resolution we can get relative to what we had in old topography maps is like night and day. If you look at old hazard maps for that area, the nature of previous existing landslides doesn’t pop out, but you look at lidar terrain maps and go, Oh! This has happened before.’

Lidar technology motivated efforts to explore the age of old landslides, and their frequency of occurrence. State agencies contracted surveyors to collect lidar data as soon as possible at the 2014 landslide site, which Montgomery and colleagues then used to develop a method for determining the age of these landslides and, therefore, a metric for landslide risk. Their results were published this year in the journal Geology.

Commercial airborne lidar systems have been available for two decades. The simple ‘linear’ beam systems offer high power but low sensitivity. For the new Washington surveys, lidar systems mounted in Cessna aircraft surveyed the landslide area from 900 metres altitude. The bidirectional or polygonal scanning mirror system measures one point on the ground for each firing of the near-infrared 21cm-diameter laser beam, with a sensor set to acquire eight points per square metre. GPS units continuously record the aircraft’s position and altitude to define the laser point position accurately and continuously, and new classification algorithms can distinguish ground, vegetation, buildings, and water.

While Montgomery was thrilled when lidar came into use, ‘for students now, this is a new normal. They don’t know what you’d do without it,’ he remarked.

Truly 3D

New lidar technology also contributes to many day-to-day activities. The growth of cities has brought about a corresponding need to refine routes that reduce travel time for both emergency and civilian vehicles. And refined navigational data is necessary for the successful deployment of newly developed autonomous vehicle navigation systems. Old estimates of the effect of sea level rise and flooding are being refined, and power companies are seeking better ways to manage vegetation encroachment on their distribution networks.

Improved data acquisition speed and spatial resolution let lidar play a role in these and other fields. Much of this new technology is not ‘new’, but has been available to the defence industry for 15 years. It was unveiled commercially in 2015 with ‘Geiger-mode’ lidar offering the most accurate elevation data available. When the US government declassified key camera components, Harris Corporation began modifying the military system for commercial use.

‘The Geiger-mode system is like a camera. It’s a paradigm shift in both efficiency and data quality,’ commented Mark Romano, Harris’s senior product manager.

An airborne circular scanner illuminates a large area of the ground. Returning light reaches all the pixels within the photodetector’s 32 x 128 array, resulting in a 3D image of elevation. The system detects objects on both the fore and aft arcs of the scanned circle, with a second flight over the area providing a total of four angles from which to collect data.

The low-power, high-sensitivity Geiger-mode lidar was developed in the classified environment of MIT’s Lincoln Laboratory. A silicon backplane camera was designed to aggregate millions of 3D data frames resulting in a photograph-like image. With high resolution and uniform imagery, feature extraction becomes easier. This method was soon able to acquire the vast data sets from which to produce the desired resolution.

‘With a linear system, what you collect is what you get. With high-sensitivity Geiger mode, we oversample the scene. We don’t take a measurement and just use it, like you would in a high power [linear] system,’ said Romano.
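One way to picture the oversampling idea is as a voting scheme: many noisy single-photon detections of the same spot are pooled, and the range that collects the most votes wins. The Python sketch below is a simplified illustration, not Harris's processing chain; the simulation parameters, the 0.5-metre bin width and all function names are assumptions chosen for the example.

```python
import random
from collections import Counter


def simulate_detections(true_range_m, n_frames, signal_probability,
                        max_range_m, jitter_m, rng):
    """Return one range value per frame: a surface return or random noise."""
    detections = []
    for _ in range(n_frames):
        if rng.random() < signal_probability:
            # Genuine return from the surface, with a little timing jitter.
            detections.append(rng.gauss(true_range_m, jitter_m))
        else:
            # Noise count (e.g. background light) at a random range.
            detections.append(rng.uniform(0.0, max_range_m))
    return detections


def estimate_range(detections_m, bin_width_m=0.5):
    """Histogram the detections and return the centre of the fullest bin."""
    if not detections_m:
        raise ValueError("need at least one detection")
    counts = Counter(int(d // bin_width_m) for d in detections_m)
    fullest_bin, _ = counts.most_common(1)[0]
    return (fullest_bin + 0.5) * bin_width_m


rng = random.Random(0)  # fixed seed so the sketch is repeatable
# Hypothetical scene: surface at 900.2 m, only 30% of frames see it.
samples = simulate_detections(900.2, 2000, 0.3, 1000.0, 0.1, rng)
estimate = estimate_range(samples)
```

No single detection can be trusted here, since most are noise, yet the pooled estimate lands within one bin of the simulated surface because the genuine returns pile up at one range while the noise spreads out.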

Harris designed the system for topographic applications using a standard 1,064nm (infrared) wavelength laser that produces optimal reflection in natural environments. ‘Now that the technology’s declassified, we can build the silicon camera for any wavelength,’ noted Romano. But there has to be a business case – one such possible case is undersea mapping.

Peering through water

Undersea mapping is already here, with bathymetric lidar offering a new way to study coastal and deep sea topography far beneath the waves.

The US Army Corps of Engineers (USACE) collects lidar data along the coastline for government agencies responsible for monitoring aquatic vegetation, flood mitigation, sediment transport and related issues concerning coastal communities. As with landslide hazard maps, regular surveys can show how benthic and shoreline habitats change over time.

A visible spectrum laser (490nm in the blue open ocean and 532nm in green coastal areas) is necessary to see through a column of water. Laser pulses shorter than those used above ground enhance the accuracy with which newer bathymetric lidar systems can detect the bottom, resulting in better vertical measurements through light-attenuating, coastal water environments. In addition, airplane flight passes need to be lower and slower for bathymetric lidar systems, to ensure they can detect the return signal.

‘The photons from your laser will be absorbed and scattered by the water. Your signal is from the photons coming back to your receiver after they hit the sea floor. The more photons you can get to the receiver, the better your chance of detecting [the signal],’ explained Kirk Waters, scientist at the US National Oceanic and Atmospheric Administration (NOAA). Transmitting more power into the water makes the return easier to separate from noise, which increases the depth that can be detected for a given water clarity and receiver.
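The link between power, water clarity and depth can be illustrated with a deliberately simple model in which the light decays exponentially on the way down and again on the way back up. The Python sketch below assumes that two-way exponential loss and lumps everything else (sea floor reflectance, receiver optics, geometry) into a single ratio; the attenuation coefficient and function names are hypothetical, chosen only for this example.

```python
import math


def returned_fraction(depth_m: float, attenuation_per_m: float) -> float:
    """Fraction of light surviving the trip down to the bottom and back.

    Assumes simple exponential loss along a path of twice the depth.
    """
    return math.exp(-2.0 * attenuation_per_m * depth_m)


def max_detectable_depth(power_to_threshold_ratio: float,
                         attenuation_per_m: float) -> float:
    """Depth at which the return has faded to the detection threshold.

    power_to_threshold_ratio is the (hypothetical) ratio of the return a
    bottom at zero depth would give to the weakest detectable return.
    """
    if attenuation_per_m <= 0:
        raise ValueError("attenuation coefficient must be positive")
    if power_to_threshold_ratio <= 1.0:
        return 0.0  # not even a surface-level return would be detectable
    return math.log(power_to_threshold_ratio) / (2.0 * attenuation_per_m)
```

In this model, depth grows only with the logarithm of power. With an assumed attenuation coefficient of 0.1 per metre, doubling the transmitted power adds about 3.5 metres of reach, whatever the starting point, which is one reason clearer water and better receivers matter as much as stronger lasers.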

Another user of this technology is USACE’s Jennifer Wozencraft, who notes that improvements in optical filters further reduce noise, and systems with larger field-of-view receivers collect more light from each laser shot. ‘Sensors that have a large field of view and low noise characteristics are letting us survey in areas that we couldn’t before,’ she said, speaking in her role as project manager at the National Coastal Mapping Programme.

Today’s smaller, less expensive components can be flown in the same aircraft for topographic and bathymetric surveys, thus allowing mapping of an entire coastline – from sand dunes out to shallow water – all in a single pass. With two iterations of the US coastal mapping project complete, Wozencraft and colleagues can quantify changes in sediment volumes and provide better data with which to evaluate complex geomorphological models.

Work towards realising the potential benefits of bathymetric lidar continues. Airborne systems using broad-beam, high-power lasers can only reach 60m water depth (some low-power versions only reach 10m). Looking deeper requires scanning from within the water itself.

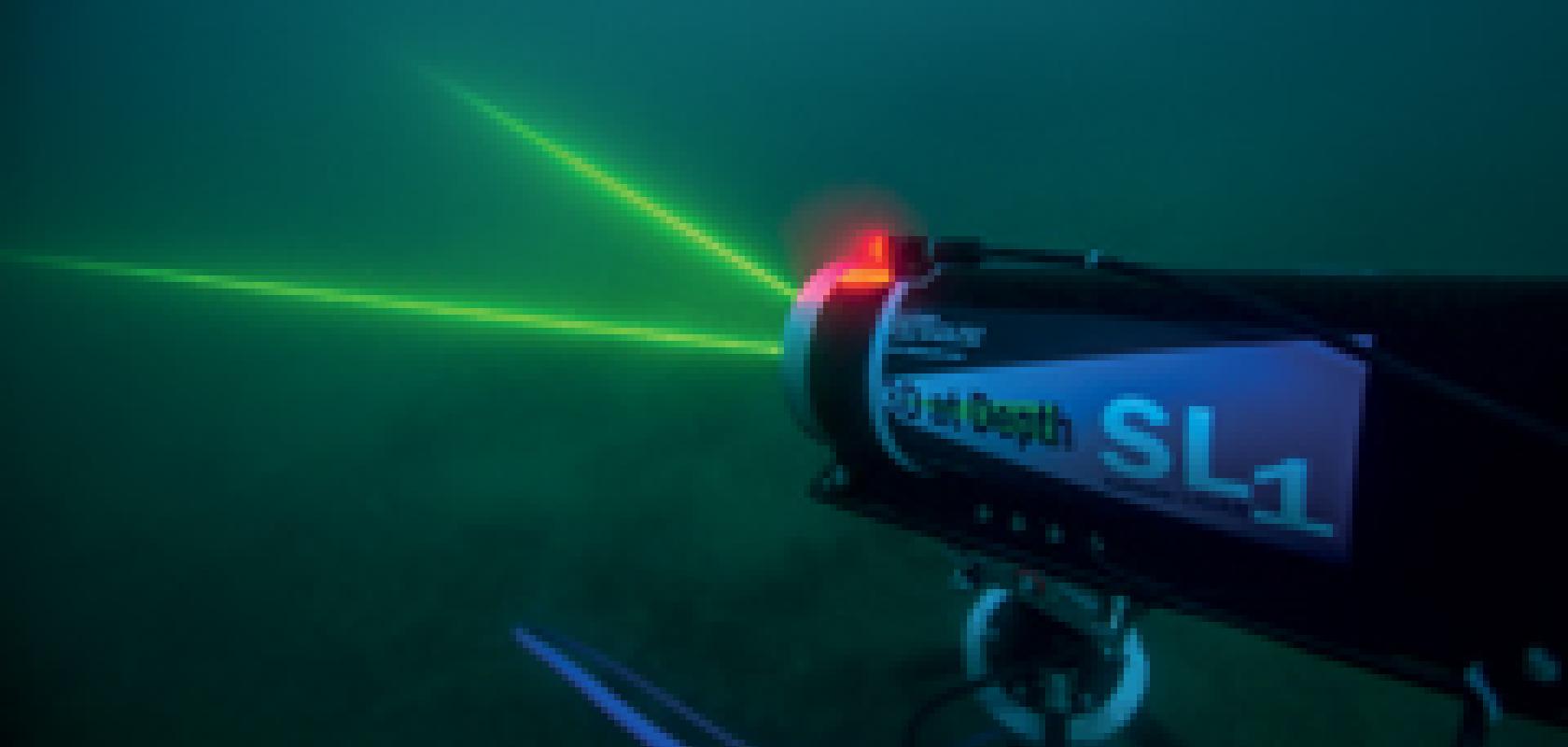

While several companies offer subsea 3D laser systems, many are triangulation- or camera-based, so have a shorter range and are more sensitive to background light and other noise. 3D at Depth, based in Boulder, Colorado, designs and builds lidar scanners for integration with remotely operated vehicles, autonomously operated vehicles, and tripods on the sea floor.

‘As with most laser systems, the recent advances in laser diodes and solid state lasers were important for our [lidar] technology,’ commented managing director Carl Embry. One challenge to overcome was designing a system that could withstand the harsh sea environment. Recent advances in optical coatings have helped increase the longevity of their systems. Also, motion sensors calibrate pitch and roll to create real world reference frames, thus overcoming the struggle to level a sea floor sensor.

3D at Depth supports a wide range of industries and applications, such as: reducing time between initial surveys and the start of extraction in the oil and gas industry; investigating sea life down to 3,000m off the Californian coast; preserving a portion of the submerged USS Arizona in Pearl Harbour, Hawaii; and improving water allocations and conservation.

And the applications are only expected to grow as new techniques and hardware become available.

How the wind blows

Not too long ago, wind speed and direction were measured just twice a day at selected locations around the globe using helium-filled balloons that were assumed to move with the wind. However, lidar can also be used to measure wind velocity, and scientists are building on existing technology to prepare an Earth-orbiting lidar system that will measure winds over the entire planet.

Atmospheric applications of lidar use outgoing laser pulses that return via scattering from small particles suspended in the atmosphere – these aerosols are assumed to move with the wind. The return signal gives a measure of the distance and speed of the particles and hence, the wind speed, relative to the lidar. These systems measure wind speed along radial lines from the lidar to the particles as determined from the Doppler frequency shift of backscattered light.
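The relationship between Doppler shift and radial wind speed is compact enough to sketch in Python. Because the light is shifted once on the way out and again on reflection, the frequency shift is twice the speed divided by the wavelength. The function names and the sign convention (positive means motion towards the lidar) are assumptions made for this example.

```python
def doppler_shift_hz(radial_speed_m_per_s: float, wavelength_m: float) -> float:
    """Frequency shift of backscattered light for a given radial speed.

    Assumed sign convention: positive speed means the scatterers are
    moving towards the lidar, giving a positive (upward) shift.
    """
    if wavelength_m <= 0:
        raise ValueError("wavelength must be positive")
    return 2.0 * radial_speed_m_per_s / wavelength_m


def radial_speed_m_per_s(shift_hz: float, wavelength_m: float) -> float:
    """Radial wind speed recovered from a measured Doppler shift."""
    if wavelength_m <= 0:
        raise ValueError("wavelength must be positive")
    return shift_hz * wavelength_m / 2.0
```

At a wavelength of two microns, `doppler_shift_hz(1.0, 2e-6)` works out to roughly one megahertz per metre per second of wind along the beam, a tiny change against an optical frequency of around 150 terahertz.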

Doppler lidar comes in two flavours. Direct-detection systems measure light intensity to calculate Doppler shift – and require a relatively strong signal – whereas coherent-detection lidar systems directly estimate frequencies, requiring a weaker signal and less energy than the direct-detection system.

While coherent detection measures light scattered off aerosol particles, direct-detection measures light scattered off individual molecules. This results in a significant increase in measurement coverage, simply because, while aerosol concentrations can be very low in the atmosphere, molecules are found everywhere. As a result, direct-detection always produces measurements, even in the upper reaches of the atmosphere. Conversely, molecules are much lighter than aerosol particles and have much higher random velocities (Brownian motion), producing a much wider backscatter signal spectrum that requires a stronger signal for accurate wind estimation.
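The size of that difference in random velocities can be estimated from basic kinetic theory, in which the spread of thermal speed along any one direction is the square root of kT/m. The Python sketch below compares a nitrogen molecule with an aerosol droplet; the 288 K temperature, the one-micron droplet diameter and the water-like density are illustrative assumptions, not figures from the missions described here.

```python
import math

BOLTZMANN_J_PER_K = 1.380649e-23   # exact SI value
AVOGADRO_PER_MOL = 6.02214076e23   # exact SI value


def thermal_speed_spread(mass_kg: float, temperature_k: float) -> float:
    """One-dimensional thermal velocity spread, sqrt(kT/m), in m/s."""
    if mass_kg <= 0 or temperature_k <= 0:
        raise ValueError("mass and temperature must be positive")
    return math.sqrt(BOLTZMANN_J_PER_K * temperature_k / mass_kg)


def sphere_mass_kg(diameter_m: float, density_kg_per_m3: float) -> float:
    """Mass of a solid sphere of the given diameter and density."""
    radius_m = diameter_m / 2.0
    return density_kg_per_m3 * (4.0 / 3.0) * math.pi * radius_m ** 3


TEMPERATURE_K = 288.0  # assumed near-surface air temperature

# Nitrogen molecule: molar mass of about 0.028 kg/mol divided by Avogadro.
nitrogen_mass_kg = 0.0280134 / AVOGADRO_PER_MOL
# Hypothetical aerosol: a one-micron droplet with the density of water.
aerosol_mass_kg = sphere_mass_kg(1e-6, 1000.0)

molecule_spread = thermal_speed_spread(nitrogen_mass_kg, TEMPERATURE_K)
aerosol_spread = thermal_speed_spread(aerosol_mass_kg, TEMPERATURE_K)
```

Under these assumptions the molecular spread is close to 300 metres per second, while the droplet's is a few millimetres per second. Wind speeds of a few metres per second therefore sit inside a very broad molecular spectrum but shift a very narrow aerosol one, which is consistent with the stronger signal that molecular measurements require.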

NASA’s Langley Research Centre is developing coherent-detection aerosol pulsed Doppler lidar, with parameters tuned to match requirements of missions to outer space. NASA is designing its Doppler Aerosol WiNd lidar (DAWN) to work in tandem with a molecular wind lidar. DAWN could, in the future, complement the European Space Agency’s Atmospheric Dynamics Mission Aeolus satellite. With a launch date in 2017, ESA’s ‘Aladin’ instrument will measure winds using a direct detection system. A future operational mission might combine Aladin with the DAWN aerosol lidar from NASA.

‘We envision the combination of the two to cover the atmosphere, from top to surface. That would share the cost. Also, with two systems you have potential of intercomparison and some redundancy,’ said NASA scientist Michael Kavaya.

DAWN’s laser, which emits high-energy pulses at a wavelength of two microns, is built around a Ho:Tm:LuLiF lasing crystal developed by NASA, and the instrument uses a 15cm beam-expanding telescope for flights on board a NASA DC-8 aircraft. For the space mission, the team plans to deploy a 70cm (aperture) telescope to counteract range losses. ‘We’re already flying a laser with the pulse energy and repetition rate that we envision necessary for a space mission,’ added Kavaya.

While DAWN’s primary purpose is to enhance the capabilities of a future weather satellite, it is already providing useful information back on the ground. DAWN measured winds in NASA’s 2010 Genesis and Rapid Intensification Processes campaign to study tropical storms. Moreover, in 2015, DAWN flew on the NASA Polar Winds campaign to study polar warming as well as to determine the feasibility of future calibration/validation with the ESA’s Aladin instrument. These missions offer insights into the hardware’s sensitivity to temperature and vibrations, and atmospheric conditions.

Where next?

The future of lidar offers the opportunity for automatically extracting critical information from data sets; fusing active and passive sensors for characterising environmental resources; processing data in real time; and mobilising instruments in unmanned aerial systems. Further developments will bring the most up-to-date mapping capabilities to the most challenging environments.