In 2018, I saw a Carl Zeiss technology fellow describe futuristic augmented reality (AR) glasses through which virtual elements could be seen in the real world.

To demonstrate the technology, a video was shown in which hundreds of schoolchildren wearing AR glasses watched in awe as a virtual whale breached the floor of their gymnasium.

While it clearly showed the potential of future AR technology, the video also revealed the long shopping list of innovations needed before such a scene could become reality. The glasses would have to offer a large field of view, high contrast and high resolution so that everyone in the audience could see the same scene clearly.

The virtual elements would also have to be bright enough to occlude the real objects and daylight behind them.

The glasses themselves would need to be compact and unobtrusive, have a long battery life, and use real-time data transfer to communicate with other devices nearby, so that each spectator could view the same scene from a different angle.

At the time, the Zeiss fellow remarked that such advanced, unobtrusive AR glasses could not yet be produced, and that developing them would be a huge undertaking, not only in terms of photonics innovation, but also in terms of the computing power and data communication speeds required.

This year, however, at SPIE AR | VR | MR, held alongside Photonics West in San Francisco, I was shown a pair of AR glasses that, while not quite the finished article, demonstrated that many of the photonics challenges highlighted five years ago have now been addressed.

Seeing is believing

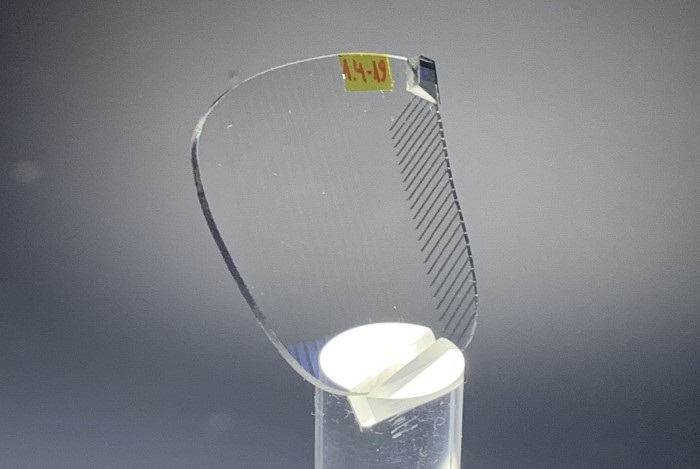

The glasses were made by Lumus, an Israeli manufacturer of reflective waveguides that had recently debuted its new “Z-Lens” 2D waveguide architecture at CES in Las Vegas. The new architecture features an optical engine 50% smaller than its predecessor, “Maximus”, and promises to enable smaller, lighter AR eyeglasses with seamless Rx (prescription) integration. It delivers 2k x 2k resolution and uniform, vibrant colour across a 50-degree field of view, with a roadmap to 80 degrees, at an outdoor-compatible brightness of 3,000 nits/W. This means detailed objects and white pages of text (down to eight-point font) can be viewed clearly in broad daylight.
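As a rough, back-of-envelope illustration of what these figures mean for sharpness, the average angular pixel density is simply the pixel count divided by the field of view. The sketch below assumes that “2k” means 2,000 pixels spread evenly along one axis of the 50-degree field; the quoted specifications don't say whether the field of view is measured horizontally or diagonally, and real waveguide optics do not map pixels perfectly evenly, so the result is indicative only. The 80-degree case is hypothetical: it asks what would happen if the same pixel count were stretched across the roadmap field of view.

```python
def average_pixels_per_degree(pixels: int, fov_degrees: float) -> float:
    """Average angular pixel density across a field of view.

    Assumption: pixels are spread evenly along one axis of the field.
    Real waveguide optics distort this, so treat the result as rough.
    """
    if fov_degrees <= 0:
        raise ValueError("field of view must be positive")
    return pixels / fov_degrees


# Hypothetical reading of the quoted specs: 2,000 pixels across 50 degrees.
ppd_today = average_pixels_per_degree(2000, 50)
# Hypothetical: the same pixel count stretched over the 80-degree roadmap.
ppd_roadmap = average_pixels_per_degree(2000, 80)
print(ppd_today, ppd_roadmap)  # 40.0 25.0
```

Under these assumptions, today's figures work out to about 40 pixels per degree; widening the field to 80 degrees without adding pixels would drop that to 25, which is why field of view and resolution have to advance together.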

However, as I found out at the show, reading stats from a press release can only tell you so much; seeing is, as they say, believing. In this case, ‘they’ was David Goldman, Lumus’ VP of Marketing, who handed me the firm’s latest demonstrator. A pair of temporary lenses matching my prescription had been attached to it, and Goldman explained that Lumus has recently developed the technology to bond prescription lenses directly to its waveguides without degrading image quality.

The Lumus demo featured a high-resolution airship that could be viewed in broad daylight (Image: Lumus)

Putting on the smart glasses, I was greeted by a colourful, steampunk-esque virtual airship floating across my field of view. The airship was rendered in such high resolution that I could look through its windows and see the cabins and their contents. And, as called for in the shopping list of innovations five years earlier, the airship occluded everything behind it in the well-lit exhibition hall we were standing in. I was told that none of what I was seeing was visible to those around me, meaning that Lumus has successfully prevented “light leakage”, where the image being viewed can be seen from outside on the lenses. This will protect the privacy of future users when viewing, for example, private documents in crowded areas.

It’s worth noting that the smart glasses had been designed only to demonstrate Lumus’ waveguide technology. They were therefore not a demonstration of current AR technology’s computing power, data transmission, or use of optical sensors and SLAM (simultaneous localisation and mapping) algorithms to anchor virtual elements to real-world surroundings. As Goldman noted, however, the demonstrator does show that “optics will no longer be a bottleneck to realising compact, futuristic AR systems”. It also shows that the full optical stack (the waveguide, projector, microdisplay and so on) can now fit within the footprint of a pair of sunglasses.

However, wires are currently required to connect the demonstrator to an external power source and a laptop generating the images. OEMs developing smart glasses will therefore have to bring Lumus’ technology together with additional components (power source, 3D sensors, accelerometers, embedded processors, wireless data communication technologies) in the same footprint to realise a consumer-suited solution.

A Magic Leap video once revealed the capabilities of augmented reality, as well as the many challenges that would need to be overcome to bring it to fruition (Image: Magic Leap)

In the meantime, Lumus believes it already knows how to shrink the footprint of its demonstrator further. For example, the microdisplays it currently uses are based on off-the-shelf liquid crystal on silicon (LCoS) technology. Switching to a custom LCoS display could reduce the demonstrator’s footprint by a further 20%, although such a display would cost around $2m to design. An alternative would be to use microLEDs, but according to Goldman this technology still lags somewhat behind in maturity. “We believe once microLEDs do reach the right level of maturity, we could get our form factor even smaller – towards a pair of thinner glasses as opposed to the current form factor,” he said.

Reflective vs diffractive

In terms of the waveguide technologies currently being explored for AR devices, Goldman divided them into two camps: reflective and diffractive. “Almost everyone is in camp diffractive, whereas we are in camp reflective,” he said. Diffractive waveguides couple light into and out of the waveguide via diffractive gratings (also referred to as holograms), while reflective waveguides instead use embedded, miniature, partially reflective mirrors. According to Goldman, Lumus favours reflective waveguides because they are more compact yet can transmit larger fields of view, while maintaining high brightness and uniform colour across the image. Another key benefit, he said, is their considerably higher efficiency, which will help prolong the battery life of future AR glasses.

Lumus says its reflective waveguide technology delivers greater efficiency, and uniform brightness and colour over a 50-degree field of view (Image: Lumus)

“What we’ve heard from third-party sources is that diffractive technologies end up consuming five to 10 times more power than our waveguides,” he said. “This is due to the more complicated path the light has to take in diffractive waveguides, and due to multiple waveguides being required to disassemble and reassemble colours constantly, whereas our technology perfectly reflects the colours throughout the system. In terms of how this affects battery lifetime, we’ve heard that a specific system using diffractive waveguides could only operate for a matter of minutes in daylight – due to the high brightness required to make images visible. Under the same conditions, a system using our waveguide would have lasted for several hours of continual use.”

According to Goldman, optics experts working at tier-1 manufacturers currently using diffractive solutions have said that Lumus’ technology delivers exceptional results in comparison.

Entering the consumer market

According to Goldman, until a few years ago (pre-Covid), Lumus wasn’t ready to enter the consumer market, and equally, the consumer market wasn’t ready for its technology. “Now this market has been really heating up,” he said. “We can see what’s going on behind the scenes and that this really is starting to take off, and so we made the decision to engage with the manufacturing supply chain in a significant way a few years ago to prepare for consumer levels of volume.”

Lumus is already in talks with all of the consumer electronics and social media companies currently exploring the AR space. “All of them are still at least considering using us as their solution,” said Goldman. “In some cases we already have licensing arrangements.”

Glass manufacturer Schott, for example, has already invested tens of millions of dollars in equipment to become a manufacturing partner of Lumus. Quanta Computer, one of the world’s biggest original design manufacturers (ODMs), which produces the Apple Watch and MacBook Pro, has also invested considerable resources to become a manufacturing partner. Quanta has set up a production line in Taipei to manufacture Lumus’ full optical stack, while Schott has set one up in Penang, Malaysia, specifically to manufacture Lumus’ 2D waveguides. “Both can see a path to mass-manufacturing at a reasonable consumer price point,” said Goldman. “We see this ‘reasonable’ price point being when the full optical stack makes up the same percentage of AR glasses’ total cost (bill of materials) as a touch screen does for a smartphone. We are definitely getting there.”

Lumus believes the consumer adoption curve of smart glasses will likely resemble that of smartwatches. “In the early days, it wasn’t necessarily obvious what the killer use cases were going to be for the smartwatch,” Goldman explained. “At first, only a few hundred thousand smartwatches were being sold. However, once OEMs started targeting health monitoring applications and consumers caught on, sales skyrocketed into the millions. It could well be the same for smart glasses. Once they get into the hands of consumers, the killer applications will become apparent, and sales will soar. As our tagline says, ‘the future is looking up’.”

Sponsored: Measuring the real and virtual world – the Prometheus Viewfinder Spectrometer in action

A colourful rainbow in the sky, a candle softly lighting up a room, a piece of glass harshly reflecting the sun: every scene or light source one can imagine can be measured and assessed objectively with colourimetry.

Why would that be relevant? The answer might not be obvious at first sight, but we take in about 80% of all information through our visual sense. For many industries and applications, it is vital to describe visual reality objectively. This information can be used, for example, to check whether the colour of a manufactured product is within tight tolerances, or whether a traffic light is neither too bright nor too dim and complies with regulations. Another typical use case is faithfully reproducing a scene in print or on a display. A spectrometer with viewfinder capabilities can cover all of these and almost any other colour measurement task.
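To make “within tight tolerances” concrete: one simple, widely used way to quantify a colour difference is the CIE 1976 ΔE*ab metric, the straight-line distance between two colours in CIELAB space. The sketch below is illustrative only; it assumes the measured and target colours have already been converted to L*a*b* values, and the sample values and the 2.0 tolerance are hypothetical. In practice, many industries prefer newer formulas such as CIEDE2000 and set their own thresholds.

```python
import math


def delta_e_76(lab1, lab2):
    """CIE 1976 colour difference: Euclidean distance in CIELAB (L*, a*, b*)."""
    return math.dist(lab1, lab2)


def within_tolerance(measured_lab, target_lab, max_delta_e):
    """Pass/fail check of a measured colour against a target colour."""
    return delta_e_76(measured_lab, target_lab) <= max_delta_e


# Hypothetical brand colour and a hypothetical measurement of a product.
target = (50.0, 20.0, -10.0)
sample = (51.0, 22.0, -8.0)

print(delta_e_76(sample, target))            # 3.0
print(within_tolerance(sample, target, 2.0))  # False: outside a 2.0 tolerance
```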

Measure what you see

The Prometheus is a high-end spectroradiometer with an integrated viewfinder that uses so-called Pritchard optics. Here, an objective lens forms an image on an angled mirror with a small hole in it. Light passing through the hole hits the measurement sensor, while the rest of the mirror reflects the light into a viewfinder. The viewfinder therefore displays the complete scene surrounding the measurement spot, with the measurement spot itself appearing as a small black circle.

The measurement angle of the Prometheus Viewfinder Spectrometer is 1.2°, which allows very precise measurements of even small areas. In the case of the Prometheus, the viewfinder is electronic, with a built-in camera, similar to a mirrorless camera. This not only makes it easy to align the measurement spot, but also allows an image of the measured scene to be saved for documentation purposes.
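The 1.2° measurement angle also determines how large the measured area is at a given distance. Under simple cone geometry, the spot diameter is d = 2 D tan(θ/2), where D is the working distance and θ the measurement angle. The sketch below applies this with θ = 1.2°; it assumes the distance is measured from the instrument's effective entrance point and ignores lens effects, so it is an approximation rather than a figure from Admesy. At the 250 mm minimum working distance given for the standard configuration, it gives a spot of roughly 5.2 mm.

```python
import math


def spot_diameter_mm(distance_mm: float, angle_deg: float = 1.2) -> float:
    """Approximate diameter of the circular measurement spot.

    Simple cone geometry: d = 2 * D * tan(angle / 2).
    Assumption: D is measured from the effective entrance point of the
    optics; real lens behaviour is ignored.
    """
    return 2.0 * distance_mm * math.tan(math.radians(angle_deg) / 2.0)


# Spot size at a few working distances (mm), starting at the 250 mm minimum.
for distance in (250, 500, 1000):
    print(distance, round(spot_diameter_mm(distance), 2))
```

Because the spot grows linearly with distance, halving the working distance halves the measured area's diameter, which is what makes small targets practical to measure up close.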

Colour measurement of course includes assessing brightness, or, more correctly, luminance. It also includes combining several measurements, for example to determine the gamma value of a display or its colour range, the so-called gamut.
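As an illustration of how several measurements combine into a single display figure, the sketch below estimates gamma by fitting a straight line to log luminance against log input level, and compares gamut triangles in the CIE 1931 xy chromaticity diagram using the shoelace formula. It is a simplified sketch, not Admesy's method: it assumes a pure power-law response with no black-level offset, and it computes an area ratio against the Rec. 709 primaries rather than true gamut coverage (the overlap between the two triangles). Gamut figures are also often quoted in the CIE 1976 u′v′ diagram, which gives different percentages. The grey-ramp readings are simulated, and the DCI-P3 primaries stand in for a hypothetical measured display.

```python
import math

# Rec. 709 / sRGB primaries in CIE 1931 xy: red, green, blue.
REC709 = ((0.64, 0.33), (0.30, 0.60), (0.15, 0.06))
# DCI-P3 primaries, used here as a hypothetical measured display.
DCI_P3 = ((0.680, 0.320), (0.265, 0.690), (0.150, 0.060))


def fit_gamma(levels, luminances):
    """Least-squares slope of log(luminance) against log(input level).

    Assumes L = k * v**gamma. Non-positive readings are skipped because
    they have no logarithm.
    """
    pts = [(math.log(v), math.log(lum))
           for v, lum in zip(levels, luminances) if v > 0 and lum > 0]
    if len(pts) < 2:
        raise ValueError("need at least two positive measurements")
    n = len(pts)
    mean_x = sum(x for x, _ in pts) / n
    mean_y = sum(y for _, y in pts) / n
    sxx = sum((x - mean_x) ** 2 for x, _ in pts)
    if sxx == 0:
        raise ValueError("need at least two different input levels")
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in pts)
    return sxy / sxx


def triangle_area_xy(red, green, blue):
    """Area of the triangle spanned by three primaries (shoelace formula)."""
    (x1, y1), (x2, y2), (x3, y3) = red, green, blue
    return abs(x1 * (y2 - y3) + x2 * (y3 - y1) + x3 * (y1 - y2)) / 2.0


def gamut_area_ratio(primaries, reference=REC709):
    """Gamut triangle area relative to a reference (1.0 = same area)."""
    return triangle_area_xy(*primaries) / triangle_area_xy(*reference)


# Simulated grey-ramp readings (cd/m^2) from a display with gamma 2.2.
levels = [32, 64, 128, 192, 255]
readings = [250.0 * (v / 255) ** 2.2 for v in levels]

print(round(fit_gamma(levels, readings), 3))    # 2.2
print(round(gamut_area_ratio(DCI_P3), 2))       # 1.36
```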

From the real world to a virtual world and to display applications

Almost anything we can see can be measured with the Prometheus Viewfinder Spectrometer. One use case was measuring odd-shaped objects such as a pen. For practical reasons, reflectance measurements are typically made with dedicated devices that have a built-in light source. But the small surface of a finished pen cannot easily be measured with typical reflectance spectrometers. So, to ensure the accuracy of the client’s brand colour, a viewfinder spectrometer came to the rescue. The same company also needed to ensure that advertisements shown on large LED panels in a football stadium displayed the correct brand colours. This is another surprising use case for a viewfinder spectrometer: measuring the colour of the displays in the stadium and then checking the same colours on the TV broadcast.

Measuring colour in any real-world scenario is possible. But a more common use case for a viewfinder spectrometer is measuring colour in extended reality (XR) set-ups. Whether the reality is virtual, augmented or mixed, it makes no difference to an observer how an image is generated. Likewise, a viewfinder spectrometer is indifferent to the underlying technology and allows the objective assessment of any XR set-up. Depending on the actual set-up, custom lenses or attachments might be needed. With the standard Prometheus Viewfinder Spectrometer, scenes at a (virtual) distance of between 250 mm and infinity can be measured.

Last but not least, any traditional display application, such as smartphones, smartwatches, tablets, laptops, TVs and LED banners, is a perfect use case for the Prometheus Viewfinder Spectrometer. Colour accuracy, gamma curves, contrast ratio and even flicker or response time measurements can be covered. During R&D, quick and precise measurements are invaluable, providing not only the colourimetric tristimulus values but also the spectral power distribution (SPD) for deeper understanding and analysis.
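Several of the display figures mentioned above reduce to simple arithmetic once the raw readings are in hand. The sketch below shows three of them: CIE 1931 xy chromaticity from tristimulus values, contrast ratio from white and black luminance, and percent flicker (modulation depth) from a sampled luminance waveform. These are generic textbook definitions rather than the Prometheus software's own calculations, and the input values are hypothetical; display standards also define other flicker metrics.

```python
import math


def chromaticity_xy(X, Y, Z):
    """CIE 1931 xy chromaticity coordinates from tristimulus values."""
    total = X + Y + Z
    if total <= 0:
        raise ValueError("tristimulus values must sum to a positive number")
    return X / total, Y / total


def contrast_ratio(white_luminance, black_luminance):
    """Full-on/full-off contrast ratio from two luminance readings (cd/m^2)."""
    if black_luminance <= 0:
        return math.inf  # a perfect black gives an unbounded ratio
    return white_luminance / black_luminance


def percent_flicker(samples):
    """Percent flicker of a sampled waveform: 100 * (max - min) / (max + min)."""
    hi, lo = max(samples), min(samples)
    if hi + lo <= 0:
        raise ValueError("luminance samples must be positive")
    return 100.0 * (hi - lo) / (hi + lo)


# Hypothetical readings.
x, y = chromaticity_xy(95.047, 100.0, 108.883)  # a D65-like white
print(round(x, 4), round(y, 4))                   # 0.3127 0.329
print(contrast_ratio(500.0, 0.5))                 # 1000.0
print(percent_flicker([90.0, 110.0, 95.0, 105.0]))  # 10.0
```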

In production settings, a high-end spectroradiometer can be used on its own, for example to calibrate highly specialised monitor solutions for video and graphic arts or medicine. Alternatively, the spectroradiometer can serve as a reference device for calibrating high-speed colourimeters, combining the blazingly fast measurement needed for the shortest takt times in production with the highest possible measurement accuracy.
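One common way to use a spectroradiometer as a reference for a faster colourimeter is matrix calibration: measure the same set of colour patches with both instruments, then find the 3×3 matrix that best maps the colourimeter's XYZ readings onto the reference readings in a least-squares sense. The source does not say which procedure Admesy uses, so the sketch below is a generic illustration under that assumption (refinements such as the four-colour method exist). It solves the normal equations M = R Cᵀ (C Cᵀ)⁻¹, with C and R holding the colourimeter and reference readings as 3×N matrices; the patch values and the "true" matrix used to simulate the reference readings are hypothetical.

```python
def transpose(m):
    return [list(row) for row in zip(*m)]


def mat_mul(a, b):
    """Plain matrix product of nested lists (rows of a times columns of b)."""
    return [[sum(a[i][k] * b[k][j] for k in range(len(b)))
             for j in range(len(b[0]))] for i in range(len(a))]


def inverse_3x3(m):
    """Inverse of a 3x3 matrix via the adjugate (transposed cofactors)."""
    (a, b, c), (d, e, f), (g, h, i) = m
    det = a * (e * i - f * h) - b * (d * i - f * g) + c * (d * h - e * g)
    if abs(det) < 1e-12:
        raise ValueError("singular matrix: use more varied colour patches")
    s = 1.0 / det
    return [[(e * i - f * h) * s, (c * h - b * i) * s, (b * f - c * e) * s],
            [(f * g - d * i) * s, (a * i - c * g) * s, (c * d - a * f) * s],
            [(d * h - e * g) * s, (b * g - a * h) * s, (a * e - b * d) * s]]


def correction_matrix(colorimeter_xyz, reference_xyz):
    """Least-squares 3x3 matrix M with M @ colorimeter ~= reference."""
    if len(colorimeter_xyz) != len(reference_xyz) or len(colorimeter_xyz) < 3:
        raise ValueError("need at least three matching measurements")
    c = transpose(colorimeter_xyz)  # 3 x N
    r = transpose(reference_xyz)    # 3 x N
    ct = transpose(c)               # N x 3
    return mat_mul(mat_mul(r, ct), inverse_3x3(mat_mul(c, ct)))


def apply_correction(m, xyz):
    """Correct one colourimeter XYZ reading with matrix m."""
    return tuple(sum(m[i][k] * xyz[k] for k in range(3)) for i in range(3))


# Hypothetical: simulate reference readings from a known "true" matrix.
TRUE_M = [[1.05, 0.02, -0.01],
          [0.01, 0.98, 0.03],
          [-0.02, 0.01, 1.10]]
patches = [(41.2, 21.3, 1.9), (35.8, 71.5, 11.9),
           (18.0, 7.2, 95.0), (95.0, 100.0, 108.9)]
reference = [apply_correction(TRUE_M, p) for p in patches]

m = correction_matrix(patches, reference)
print([[round(v, 4) for v in row] for row in m])  # recovers TRUE_M
```

With noise-free simulated data the fit recovers the original matrix; with real, noisy measurements, using more than three well-spread patches lets the least-squares fit average out some of the error.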

One measurement device to rule them all

It should now be clear why a viewfinder spectrometer is not only the colour scientist’s favourite and most versatile measurement instrument, but also the perfect solution for R&D tasks and well suited to process control and quality assessment in production. The Prometheus Viewfinder Spectrometer satisfies the highest demands. Its high dynamic range, excellent low-light performance, low stray light, polarisation insensitivity and highest wavelength accuracy with built-in monitoring ensure the most accurate colour measurements possible. The sheer number of possibilities, coupled with the highest optical performance, explains why the Prometheus Viewfinder Spectrometer is a measurement device to rule them all.

Further information: www.admesy.com