Whereas ten years ago the limiting factor affecting an imaging system’s performance was largely the resolution of the sensor, the emergence of low-cost, high-resolution sensors has highlighted challenges in optical design.

The standard imaging resolution is now 4K, or ultra-high definition (UHD), and is quickly moving higher (8K+).

Put simply, trying to fit more pixels onto a sensor can be approached in two ways – by making the pixels smaller or the sensor larger. According to Michael Thomas, president of Navitar, a producer of optical solutions, this results in a trade-off between size and image quality: ‘If you want to put [4K resolution] into a cell phone or point-of-view (POV) camera and want your sensor to be compact, this means you have very small pixels to fit 4,000 of them across the sensor. These tiny pixel sizes in mobile devices often present a real signal-to-noise-ratio (SNR) problem.

‘But if you have more flexibility and you care about the best image possible – this normally means high resolution and high dynamic range (DR) – the bigger the pixel the better the SNR,’ Thomas said. ‘For many professional applications, systems engineers want the largest pixels possible. If you take these big pixels and multiply it by 4,000 across, you end up with a very large sensor.’
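The link between pixel size and SNR can be illustrated with a rough sketch. It assumes a purely shot-noise-limited pixel, where the SNR is the square root of the collected signal electrons and the signal scales with pixel area. The function name, the photon density, the quantum efficiency and the 1.1µm and 4.5µm pixel pitches are all illustrative assumptions, not figures from Navitar, and real sensors also have read noise and dark current that this model ignores.

```python
import math


def shot_noise_snr(pixel_pitch_um, photons_per_um2, quantum_efficiency=0.6):
    """Shot-noise-limited SNR of a square pixel (simplified, hypothetical model)."""
    # Collected signal scales with the pixel's light-gathering area.
    area_um2 = pixel_pitch_um ** 2
    signal_electrons = photons_per_um2 * area_um2 * quantum_efficiency
    # For Poisson (shot) noise, noise = sqrt(signal), so SNR = sqrt(signal).
    return math.sqrt(signal_electrons)


# Assumed pitches: a small mobile-style pixel and a larger machine vision pixel.
small = shot_noise_snr(1.1, photons_per_um2=1000)
large = shot_noise_snr(4.5, photons_per_um2=1000)
ratio = large / small

print(f"SNR advantage of the larger pixel: {ratio:.2f}x")
```

Under these assumptions the SNR grows linearly with pixel pitch, so the advantage of the larger pixel is simply the ratio of the two pitches.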

Big application, big sensor

For industrial and machine vision applications that require higher sensitivity, contrast and dynamic range than consumer cameras, a 4.5µm pixel is a fairly standard size. Whereas standard sensor sizes for machine vision used to be 1/3 inch, 1/2 inch, 2/3 inch and one inch, lenses can now be found in formats larger than one inch.
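As a back-of-the-envelope check on how quickly the sensor grows, the sketch below multiplies pixel pitch by pixel count. It assumes a UHD pixel grid of 3,840 x 2,160 and square pixels; the function name is hypothetical and the calculation ignores any inactive border pixels on a real sensor.

```python
import math


def sensor_size_mm(pixels_wide, pixels_high, pixel_pitch_um):
    """Return (width, height, diagonal) of the active area in millimetres."""
    width_mm = pixels_wide * pixel_pitch_um / 1000.0
    height_mm = pixels_high * pixel_pitch_um / 1000.0
    diagonal_mm = math.hypot(width_mm, height_mm)
    return width_mm, height_mm, diagonal_mm


# Assumed UHD grid with the 4.5µm pixel mentioned in the text.
width, height, diagonal = sensor_size_mm(3840, 2160, 4.5)
print(f"{width:.2f} x {height:.2f} mm, diagonal {diagonal:.2f} mm")
```

With these inputs the active area is about 17.3 x 9.7mm with a diagonal of just under 20mm. A nominal one-inch format is commonly quoted as having a diagonal of roughly 16mm, so a 4K sensor with 4.5µm pixels already falls outside the traditional machine vision formats.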

Writing in Electro Optics’ sister title, Imaging and Machine Vision Europe, Greg Hollows, director of the Imaging Business Unit at Edmund Optics, commented that ‘with all the new sensor formats on or now entering the market, there is limited off-the-shelf product coverage for them and, in many cases, larger sensors will require larger lenses that are going to be more expensive.’

One of the mistakes customers can make when buying camera systems is choosing the highest possible sensor resolution without taking into consideration the cost of the lens. Customers have to bear in mind that the quality of the image is only as good as that provided by the lens.

Thomas at Navitar remarked: ‘It is true that in machine vision, engineers are willing to spend thousands on new large format, HDR sensors and think that a $50 lens out of a catalogue can provide the right balance of resolution and DR and athermal performance.’

‘In many cases, this is not the case unless the system has been specifically designed to take advantage of these new sensors,’ Thomas continued, adding that Navitar’s Resolv4K is a good example of an off-the-shelf product that was built from scratch to take advantage of the large sensor format migration to obtain more throughput.

‘One of the great optical scientists, Douglas Goodman, used to say: “Don’t put a $100 lens on a million dollar instrument”. It was good advice then, and it’s good advice now,’ he said.

Custom designs

Many lens suppliers, including Navitar and Edmund Optics, produce custom lenses for high-resolution imaging systems with larger sensor sizes. Often, the higher the resolution, the greater the number of optical components required in the system to correct for residual aberrations. If not corrected, aberrations will produce colour fringing or images that are never fully in focus. To deal with these aberrations, optical designers need more surfaces, air spaces and different materials within the lens design. These create more degrees of freedom, which allow designers to correct and control residual aberrations and enable the system to transmit a high-resolution image to the sensor.

Various types of glass are used for colour correction and to counteract aberrations. However, as resolutions go up this becomes more challenging, because the level of correction required increases with the resolution. Typically, calcium fluoride glasses are used to achieve high resolutions because of their low dispersion properties.

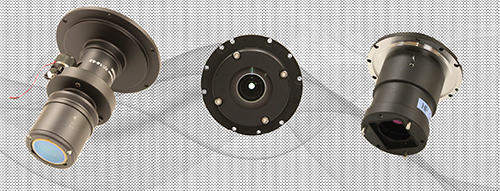

Navitar's 4K HDR wide-angle lenses are environmentally robust and perform from -20°C to 80°C

In addition to high-resolution systems, custom lenses are also being designed for emerging machine vision applications, where off-the-shelf lenses don’t exist yet. ‘In areas such as robotics or autonomous vehicles and unmanned aerial vehicles (UAVs), there is a need for very ruggedised, high dynamic range (HDR) lenses that are also athermal,’ said Thomas.

Asphere lenses are a suitable choice for these applications, Thomas added, as they allow for more freedom in the optical design to correct higher-order aberrations and to make the systems more compact. ‘Aspherical surfaces in general just allow the designer more variables to correct image error. We don’t need these in our lower NA inspection systems, but it’s critical in our vehicle or drone mounted lenses,’ Thomas said.

Although not many off-the-shelf lenses exist for cameras being used in these types of extreme environments, manufacturers are working to change this. ‘We’re doing a lot of new product development for advanced application lenses, which will eventually catch on and become more off-the-shelf,’ Thomas remarked.

He added that a lot of time is spent making sure that tolerance designs predict a high enough yield that the company can build the lens profitably in production. ‘There are many lenses we design that we don’t end up releasing because the tolerance analysis finds that our yields will just be too poor,’ Thomas said. ‘So, on the one hand we’re pushing new ground in the optical design space, but at the same time we’re balancing that with very vigorous tolerance analysis to ensure the lenses are producible and stable and eventually can be passed on to our customers at a reasonable cost.’

Hollows at Edmund Optics noted in the article for Imaging and Machine Vision Europe that the machine vision sector, in particular, needs to develop its own optics solutions for the problem of larger sensor sizes. ‘As sensors grow in size and we push out of the C-mount, simply using what is available for photography is not the correct path. While this can be viewed as an efficient fix, it is too large of a jump for what is actually needed and will greatly limit the design tools available for optical designers, along with creating expensive oversized products. Moving to logical solutions that may be very specific to the machine vision industry has to be part of the solution, even if it initially takes longer development time.

‘At the end of the day, we have arrived at the inevitable outcome that could be seen years ago: eventually one technology – in this case sensor technology – would surpass the abilities and availability of other core imaging technologies,’ he continued. ‘Out of convenience the [machine vision] industry has leveraged other application spaces to solve the limitations. Now more than ever before, the imaging community needs to work together to create the solution spaces for the market. If we do not, someone else will do it for us.’

For applications such as night surveillance, astronomy or food quality assurance, near-infrared (NIR) cut-off filters are necessary for high-resolution image sensors. By reducing the amount of red and infrared light reaching the sensor, they correct the sensor’s over-interpretation of red light so that it matches human perception.

‘Colour is not a physical property of matter, instead colour is generated by our brain,’ said Dr Ralf Biertümpfel, product manager of optical filters at Schott Advanced Optics, which produces filters for consumer and industrial applications. ‘For example: take a “white” paper, go into a dark room and illuminate it with red light while looking at it. Thus, the paper is only white to our brain, when it is illuminated in the correct way.’

All colour cameras have colour pixels that are very different from the sensors inside of a human eye, Biertümpfel added. ‘But the goal is to come as close as possible to the human eye.’

Digital CCD and CMOS sensors detect near-infrared radiation as well as visible light, and the NIR contribution causes false colours. ‘The effect of a NIR cut filter works in three dimensions: first, the NIR cut filter takes away the NIR radiation; second, red light and NIR radiation are absorbed; and third, the digital sensor works the same as a human eye, the colour vision is aligned to human perception.’